You can use this model in the Classify Objects Using Deep Learning tool available in the Image Analyst toolbox in ArcGIS Pro. Follow the steps below to use the model for visual question answering in images.

Use the model

Use the following steps to generate a response to a question about the imagery:

- Download the HF Visual Question Answering model and add the imagery layer in ArcGIS Pro.

- Zoom to an area of interest.

- Browse to Tools under the Analysis tab.

- Click the Toolboxes tab in the Geoprocessing pane, select Image Analyst Tools, and browse to the Classify Objects Using Deep Learning tool under Deep Learning.

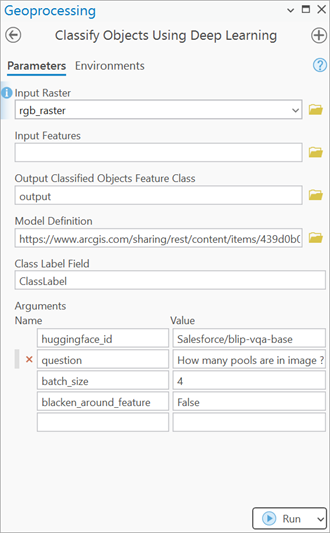

- Set the variables under the Parameters tab as follows:

- Input Raster—Select the imagery.

- Output Classified Objects Feature Class—Set the output feature layer that will contain the generated response from the model.

- Model Definition—Select the pretrained model .dlpk file.

- Class Label Field—The name of the field that will contain the class or category label in the output feature class.

- Arguments—Change the values of the arguments if required.

- huggingface_id—The model ID of a pretrained Visual Question Answering model hosted on huggingface.co.

To find Visual Question Answering models, select the Visual Question Answering tag in the Tasks list on the Hugging Face model hub.

The model ID has the form {username}/{repository}, as displayed at the top of the model page.

Only models that include config.json and preprocessor_config.json are supported. You can verify that these files are present on the Files and versions tab of the model page.

- question—The question to be answered based on the image.

- batch_size—Number of image tiles processed in each step of model inference. The value you can use depends on the memory of your graphics card.

- blacken_around_feature—Specifies whether the pixels surrounding each object or feature in an image tile are masked out. If set to False (the default), the surrounding pixels are not masked and remain visible.
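The huggingface_id requirements above can be checked before you run the tool. The following is a minimal sketch, not part of ArcGIS or the model package: the helper names `is_valid_model_id`, `missing_required_files`, and `check_model` are hypothetical, and the code assumes you already have the repository's file listing as a list of file names.

```python
import re

# Hypothetical helpers (not part of arcpy or the model package) that mirror
# the huggingface_id requirements described above.
REQUIRED_FILES = ("config.json", "preprocessor_config.json")
# {username}/{repository}: exactly one slash, no spaces.
MODEL_ID_PATTERN = re.compile(r"[\w.-]+/[\w.-]+")


def is_valid_model_id(model_id):
    """Return True if model_id has the {username}/{repository} form."""
    return MODEL_ID_PATTERN.fullmatch(model_id) is not None


def missing_required_files(repo_files):
    """Return the required configuration files absent from a file listing."""
    present = set(repo_files)
    return [name for name in REQUIRED_FILES if name not in present]


def check_model(model_id, repo_files):
    """Raise ValueError if the model ID or its file listing is unsupported."""
    if not is_valid_model_id(model_id):
        raise ValueError(f"expected {{username}}/{{repository}}, got {model_id!r}")
    missing = missing_required_files(repo_files)
    if missing:
        raise ValueError(f"{model_id} is missing: {', '.join(missing)}")
```

If the `huggingface_hub` package is installed, `huggingface_hub.list_repo_files(model_id)` returns such a listing. `check_model` raises `ValueError` when the ID is not in {username}/{repository} form or when a required configuration file is missing.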

- Set the variables under the Environments tab as follows:

- Processing Extent—Select Current Display Extent or any other option from the drop-down menu.

- Cell Size (required)—Set the value to the resolution of the imagery. You can keep the default value.

- Processor Type—Select CPU or GPU.

It is recommended that you select GPU, if available, and set GPU ID to the GPU to be used.
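In a Python script, these Environments settings correspond to attributes of `arcpy.env` (`extent`, `cellSize`, `processorType`, and `gpuId`). The sketch below is an illustration under that assumption: the function names are hypothetical, and the code only collects and applies values, so you pass `arcpy.env` in yourself when running inside ArcGIS Pro.

```python
def environment_settings(cell_size=None, extent=None, use_gpu=True, gpu_id=0):
    """Map the Environments tab choices to arcpy.env attribute names.

    Settings left as None are omitted, so the tool keeps its defaults.
    """
    settings = {"processorType": "GPU" if use_gpu else "CPU"}
    if use_gpu:
        settings["gpuId"] = gpu_id  # index of the GPU to use
    if cell_size is not None:
        settings["cellSize"] = cell_size  # usually the imagery resolution
    if extent is not None:
        settings["extent"] = extent  # e.g. an arcpy.Extent or a dataset path
    return settings


def apply_settings(env, settings):
    """Copy each setting onto an environment object such as arcpy.env."""
    for name, value in settings.items():
        setattr(env, name, value)
    return env
```

For example, `apply_settings(arcpy.env, environment_settings(cell_size=0.5, gpu_id=0))` selects the first GPU; the 0.5 cell size is a placeholder for your imagery's resolution. The Current Display Extent option has no direct script equivalent, so in a script you would pass an explicit extent instead.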

- Click Run.

Once processing finishes, the output layer is added to the map.
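The same workflow can also be scripted. This minimal sketch assumes the arcpy function `arcpy.ia.ClassifyObjectsUsingDeepLearning` with the keyword names `in_raster`, `out_feature_class`, `in_model_definition`, `class_label_field`, and `model_arguments`. It also assumes that the Arguments value can be passed as a list of [name, value] pairs and that boolean arguments are accepted as the strings "True" and "False". Verify these against the tool reference for your ArcGIS Pro version. The helper names and the default field name `ClassLabel` are hypothetical.

```python
def build_classify_kwargs(in_raster, out_feature_class, model_definition,
                          huggingface_id, question, class_label_field="ClassLabel",
                          batch_size=4, blacken_around_feature=False):
    """Collect the tool parameters as keyword arguments (hypothetical helper)."""
    # Arguments are assumed to be accepted as [name, value] string pairs.
    model_arguments = [
        ["huggingface_id", huggingface_id],
        ["question", question],
        ["batch_size", str(batch_size)],
        ["blacken_around_feature", str(blacken_around_feature)],
    ]
    return {
        "in_raster": in_raster,
        "out_feature_class": out_feature_class,
        "in_model_definition": model_definition,
        "class_label_field": class_label_field,
        "model_arguments": model_arguments,
    }


def run_classification(kwargs, processor_type="GPU", gpu_id=0):
    """Run the tool; requires ArcGIS Pro with the Image Analyst extension."""
    import arcpy  # imported here so the helper above works without ArcGIS

    arcpy.CheckOutExtension("ImageAnalyst")
    arcpy.env.processorType = processor_type
    if processor_type == "GPU":
        arcpy.env.gpuId = gpu_id
    try:
        return arcpy.ia.ClassifyObjectsUsingDeepLearning(**kwargs)
    finally:
        arcpy.CheckInExtension("ImageAnalyst")
```

For example, call `run_classification(build_classify_kwargs(raster_path, output_fc, dlpk_path, model_id, "How many buildings are in this image?"))`, substituting your own raster, output feature class, .dlpk path, and {username}/{repository} model ID. Only `run_classification` needs arcpy, which keeps the parameter assembly testable outside ArcGIS Pro.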