You can use this model in the Detect Objects Using Deep Learning tool available in the Image Analyst toolbox in ArcGIS Pro. The complete workflow involves preprocessing and postprocessing steps using additional tools. This model can also be fine-tuned using the Train Deep Learning Model tool. See the Fine-tune the model page for details on how to fine-tune this model.

Detect ships using ArcGIS Project Template

- Download the Detect Ships from SAR ArcGIS Pro project template to use the Ship Detection (SAR) pretrained model or a fine-tuned model.

- Start ArcGIS Pro, and from the project selection screen, choose Select another project template.

- Browse to the downloaded template and click OK.

- Provide a name for the new project and click OK.

- Expand the Sentinel-1 GRDH data folder (.SAFE), right-click the manifest.safe file, and select Add To Current Map.

You can download the imagery from Copernicus Data Space Ecosystem or from Sentinel Hub.

- Zoom to an area of interest.

- Browse to <Project_name>.tbx under Toolboxes in the Catalog pane to access the Detect Ships using SAR data tool.

- On the Parameters tab, set the variables as follows:

- For Input Raster, select the SAR imagery layer.

- For Output Detected Ships, set the output feature class that will contain the detected ships.

- Optionally, for Input Water Polygon, select the water mask polygon.

- Optionally, for Model Definition, select the Ship Detection (SAR) pretrained model .dlpk file.

- Optionally, for Model Arguments, change the values of the arguments if required.

- Optionally, check the Non Maximum Suppression check box to remove the overlapping features with lower confidence.

If checked, do the following:

- Set Confidence Score Field.

- Optionally, set Class Value Field.

- Optionally, set Maximum Overlap Ratio.

- For Processor Type, select CPU or GPU as needed.

If GPU is available, it is recommended that you select GPU and set GPU ID to the GPU to be used.

- Change Cell Size if required.

The expected SAR image resolution is 10 meters.

Note:

If the model definition is not passed, the model will be automatically downloaded from ArcGIS Living Atlas of the World.

- Click Run.

The output layer is added to the map.

You can zoom in to take a closer look at the results.
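Before adding imagery to the map as described in the steps above, it can help to confirm that each downloaded Sentinel-1 product folder contains a manifest.safe file. The following is a minimal sketch using only the Python standard library; the function name `find_manifests` and the idea of scanning a download folder are illustrative assumptions, not part of the project template.

```python
from pathlib import Path


def find_manifests(root):
    """Return sorted paths of manifest.safe files that sit directly
    inside a folder whose name ends in .SAFE, anywhere under root."""
    return sorted(
        path
        for path in Path(root).rglob("manifest.safe")
        if path.parent.suffix.upper() == ".SAFE"
    )
```

Calling `find_manifests` on a download folder (for example, a hypothetical `C:/data/sentinel1`) lists the manifest.safe files that can be added to the map.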

Detect ships using the Detect Objects Using Deep Learning tool

- Expand the Sentinel-1 GRDH data folder (.SAFE), right-click the manifest.safe file, and select Add To Current Map.

You can download the imagery from Copernicus Data Space Ecosystem or from Sentinel Hub.

- To extract the VV band from the manifest.safe file, use the Extract Bands raster function. On the General tab, set the variables as follows:

- For Name, use the default value.

- For Output Pixel Type, select 8 Bit Unsigned from the drop-down list.

- On the Parameters tab, set the variables as follows:

- For Raster, select the IW_manifest raster added to the map or select the manifest.safe file from the source data folder.

- For Method, select the Band IDs option.

For Combination, note that band 1 of the manifest.safe raster represents the VV band. The model is compatible with a three-band composite of the VV band.

- To create a three-band composite, type 1 1 1.

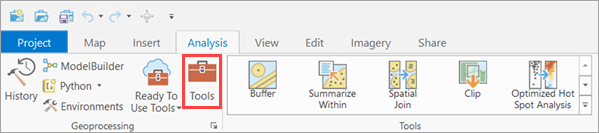
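To illustrate what the band combination 1 1 1 means, the sketch below selects bands by 1-based ID from a toy raster, so the same band is repeated three times. It is a plain-Python illustration only, not the Extract Bands raster function itself; the function name `extract_bands`, the nested-list layout, and the pixel values are assumptions made for the example, and pixel type conversion is not modeled.

```python
def extract_bands(bands, band_ids):
    """Build a new band list from a space-separated string of 1-based band IDs.

    bands is a list of 2D grids (lists of rows), one grid per band.
    """
    ids = [int(token) for token in band_ids.split()]
    for band_id in ids:
        if not 1 <= band_id <= len(bands):
            raise ValueError(f"band ID {band_id} is out of range")
    # Copy each selected band so the output bands are independent of the input.
    return [[row[:] for row in bands[band_id - 1]] for band_id in ids]


# Toy raster with made-up values: band 1 stands in for VV, band 2 is another band.
toy_raster = [
    [[10, 20], [30, 40]],
    [[1, 2], [3, 4]],
]
composite = extract_bands(toy_raster, "1 1 1")
```

Here `composite` holds three bands, each a copy of band 1 of `toy_raster`.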

- Click the Analysis tab and browse to Tools.

- In the Geoprocessing pane, click Toolboxes and expand Image Analyst Tools. Select the Detect Objects Using Deep Learning tool under Deep Learning.

- On the Parameters tab, set the variables as follows:

- For Input Raster, select the three-band composite raster of the VV band.

- For Output Detected Objects, set the output feature class that will contain the detected ships.

- For Model Definition, select the pretrained model .dlpk file.

- For Model Arguments, change the values of the arguments if required.

- padding—The number of pixels at the border of image tiles from which predictions are blended for adjacent tiles. You can increase its value to smooth the output while reducing edge artifacts. The maximum value of the padding can be half of the tile size value.

- threshold—The detections with a confidence score higher than this threshold are included in the result. The allowed values range from 0 to 1.0.

- nms_overlap—The maximum overlap ratio for two overlapping features, which is defined as the ratio of intersection area over union area. The default is 0.1.

- batch_size—The number of image tiles processed in each step of the model inference. This depends on the memory of your graphics card.

- exclude_pad_detections—If True, this filters potentially truncated detections near the edges that are in the padded region of image chips.

- Optionally, check the Non Maximum Suppression check box to remove the overlapping features with lower confidence.

If checked, do the following:

- Set Confidence Score Field to Confidence.

- Set Class Value Field to Class.

- Set Max Overlap Ratio to 0.2.
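The overlap ratio used for non maximum suppression is the intersection area divided by the union area of two features. The sketch below computes that ratio for axis-aligned boxes and applies a standard greedy suppression pass. It is an illustration of the general technique and not necessarily the exact procedure the tool runs; the box format `(xmin, ymin, xmax, ymax)`, the function names, and the sample values are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, max_overlap_ratio):
    """Greedy NMS over a list of (box, confidence) pairs.

    Detections are visited from highest to lowest confidence; one is kept
    only if its overlap with every already kept box is within the limit.
    """
    kept = []
    for box, confidence in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) <= max_overlap_ratio for kept_box, _ in kept):
            kept.append((box, confidence))
    return kept


# Made-up detections: the first two overlap heavily, the third is separate.
sample = [
    ((0, 0, 10, 10), 0.9),
    ((1, 1, 11, 11), 0.6),
    ((20, 20, 30, 30), 0.5),
]
result = non_max_suppression(sample, max_overlap_ratio=0.2)
```

With a maximum overlap ratio of 0.2, the lower-confidence box of the overlapping pair is dropped and the other two detections are kept.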

- On the Environments tab, set the variables as follows:

- For Processing Extent, select Current Display Extent or any other option from the drop-down menu.

- For Cell Size, set the value to Maximum of Inputs.

The expected raster resolution is 10 meters.

- For Processor Type, select CPU or GPU as needed.

If GPU is available, it is recommended that you select GPU and set GPU ID to the GPU to be used.

- Click Run.

The output layer is added to the map.

You can zoom in to take a closer look at the results.
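The steps above can also be scripted. The following is a minimal sketch, assuming ArcGIS Pro with the Image Analyst extension and `arcpy` available. The helper names, the `tile_size` parameter used for the padding check, the GPU ID of 0, and all argument values are illustrative assumptions; substitute your own paths and settings.

```python
def build_model_arguments(padding, threshold, nms_overlap, batch_size,
                          exclude_pad_detections, tile_size):
    """Validate the model arguments and format them as 'name value' pairs
    separated by semicolons, the form accepted by the tool's arguments parameter.

    tile_size is a hypothetical input used only to check the padding limit.
    """
    if not 0 <= padding <= tile_size // 2:
        raise ValueError("padding can be at most half of the tile size")
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1.0")
    pairs = {
        "padding": padding,
        "threshold": threshold,
        "nms_overlap": nms_overlap,
        "batch_size": batch_size,
        "exclude_pad_detections": exclude_pad_detections,
    }
    return ";".join(f"{name} {value}" for name, value in pairs.items())


def detect_ships(in_raster, out_features, model_dlpk, arguments):
    """Run Detect Objects Using Deep Learning with the settings from the steps above."""
    import arcpy  # imported here so the helper above works without ArcGIS Pro

    arcpy.CheckOutExtension("ImageAnalyst")
    arcpy.env.cellSize = "MAXOF"      # Maximum of Inputs
    arcpy.env.processorType = "GPU"   # use "CPU" if no GPU is available
    arcpy.env.gpuId = 0               # assumption: the first GPU is used
    arcpy.ia.DetectObjectsUsingDeepLearning(
        in_raster, out_features, model_dlpk, arguments,
        "NMS", "Confidence", "Class", 0.2,
    )


# Illustrative values only; tune them for your data and graphics card.
example_arguments = build_model_arguments(
    padding=56, threshold=0.5, nms_overlap=0.1, batch_size=4,
    exclude_pad_detections=True, tile_size=224,
)
```

In an ArcGIS Pro Python environment, `detect_ships` would then be called with the three-band VV composite, an output feature class path, the .dlpk file, and `example_arguments`.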