You can use the Image Interrogation pretrained model in the Classify Objects Using Deep Learning tool from the Image Analyst toolbox in ArcGIS Pro.

Classify imagery

To classify imagery using the Image Interrogation model, complete the following steps:

- Download the Image Interrogation model.

Note:

This model requires an internet connection if you're using OpenAI's vision language models. The data used for classification, including the imagery and the possible class labels, will be shared with OpenAI. If you're using the Llama Vision model, it runs locally and doesn't require an internet connection, so your data remains on your machine and is not shared externally.

- Click Add Data to add an image to the Contents pane.

You'll run the prediction on this image.

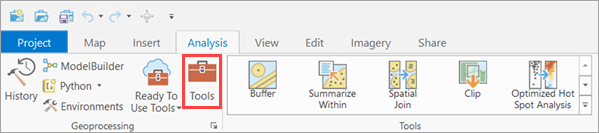

- Click the Analysis tab and click Tools.

- In the Geoprocessing pane, click Toolboxes, expand Image Analyst Tools, and select the Classify Objects Using Deep Learning tool under Deep Learning.

- On the Parameters tab, set the parameters as follows:

- Input Raster—Choose an input image from the drop-down menu or from a folder location.

- Input Features (optional)—Select the feature layer if you want to limit the processing to specific regions in the raster identified by the feature class.

- Output Classified Objects Feature Class—Set the output feature layer that will contain the labels.

- Model Definition—Select the pretrained model .dlpk file.

- Arguments (optional)—Change the values of the arguments if required.

- prompt—Provide a prompt that asks any question about the image, for example, "Describe the image."

- ai_connection_file—The path to the connection file that contains the details of the model to use. The file can have the extension .ais (AI Service Connection file) or .json. Currently supported models are the OpenAI vision language models deployed on OpenAI or Azure, and the Llama Vision models installed locally on the machine, so you can choose between a cloud-based OpenAI service and a locally deployed Llama Vision model. The file must be accessible from the machine on which the geoprocessing tool runs. The following example shows the connection JSON file for an OpenAI deployment on Azure:

OpenAI Azure connection JSON file

```json
{
  "service_provider": "AzureOpenAI",
  "api_key": "YOUR_API_KEY",
  "azure_endpoint": "YOUR_AZURE_ENDPOINT",
  "api_version": "YOUR_API_VERSION",
  "deployment_name": "YOUR_DEPLOYMENT_NAME"
}
```

To connect directly to OpenAI, set service_provider to "OpenAI" and provide your OpenAI API key in the api_key field. You must also specify the model to use in the deployment_name field (for example, "gpt-4o" or "gpt-4"). The remaining fields can be left blank when connecting directly to OpenAI, because they are only required for OpenAI services hosted on Azure.
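Because a .json connection file is plain JSON, you can also generate it with a script. The following is a minimal sketch, not part of ArcGIS Pro: the function name write_connection_file is hypothetical, and the per-provider required keys are an assumption inferred from the fields described above (all four Azure fields, but only api_key and deployment_name for direct OpenAI connections).

```python
import json
from pathlib import Path

# Assumed required fields per provider, inferred from the connection file
# description above; the tool's own validation rules may differ.
REQUIRED_KEYS = {
    "AzureOpenAI": ("api_key", "azure_endpoint", "api_version", "deployment_name"),
    "OpenAI": ("api_key", "deployment_name"),
}


def write_connection_file(path, service_provider, **fields):
    """Check the fields for a provider, then write them as a JSON connection file.

    Hypothetical helper for illustration; returns the dictionary that was written.
    """
    if service_provider not in REQUIRED_KEYS:
        raise ValueError(f"Unsupported service_provider: {service_provider!r}")
    # Empty strings count as missing, since the tool needs real values here.
    missing = [key for key in REQUIRED_KEYS[service_provider] if not fields.get(key)]
    if missing:
        raise ValueError(f"Missing values for {service_provider}: {', '.join(missing)}")
    config = {"service_provider": service_provider, **fields}
    Path(path).write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```

For example, calling write_connection_file("openai_connection.json", "OpenAI", api_key="YOUR_API_KEY", deployment_name="gpt-4o") writes a direct OpenAI connection file that you can then select for ai_connection_file.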

To use Llama Vision without cloud-based services, follow these steps to download the model weights:

- Create a Hugging Face account at https://huggingface.co/join

- Open a Python command prompt and run huggingface-cli login. This will prompt you for an access token, which you can get from https://huggingface.co/settings/tokens.

Note:

To use huggingface-cli, you must have Git installed on your machine.

- Visit https://huggingface.co/meta-llama/Llama-3.2-11B-Vision-Instruct and accept the terms to gain access to the model.

- Run huggingface-cli download meta-llama/Llama-3.2-11B-Vision-Instruct in the Python command prompt to download the model.
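If you prefer to script the download steps above, the following minimal sketch checks that Git and huggingface-cli are on your PATH before running the same download command. It assumes you have already run huggingface-cli login and accepted the model terms; the helper names are hypothetical.

```python
import shutil
import subprocess

# Repository ID taken from the download steps above.
MODEL_REPO = "meta-llama/Llama-3.2-11B-Vision-Instruct"


def missing_prerequisites():
    """Return the required command-line tools that cannot be found on PATH."""
    return [tool for tool in ("git", "huggingface-cli") if shutil.which(tool) is None]


def build_download_command(repo_id=MODEL_REPO):
    """Build the same command you would type in the Python command prompt."""
    return ["huggingface-cli", "download", repo_id]


def download_weights(repo_id=MODEL_REPO):
    """Download the weights; assumes huggingface-cli login has already succeeded."""
    missing = missing_prerequisites()
    if missing:
        raise RuntimeError("Not found on PATH: " + ", ".join(missing))
    # check=True raises CalledProcessError if the download fails, for example
    # when the model terms have not been accepted for your account.
    subprocess.run(build_download_command(repo_id), check=True)
```

Running the checks first gives a clearer error than a failed download when Git or the CLI is missing.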

Once the weights are downloaded, you can modify the connection JSON to use Llama Vision as follows:

Llama Vision connection JSON file

```json
{ "service_provider": "local-llama" }
```
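Before running the tool, you may want to confirm which provider a connection file points to, because that determines whether your imagery leaves the machine. The following minimal sketch reads a .json connection file and reports whether inference runs locally or in the cloud. The function name is hypothetical, and the sketch assumes the file is plain JSON (an .ais file created by ArcGIS Pro may not be). Note that strict JSON parsers, including Python's, reject trailing commas such as {"service_provider": "local-llama",}.

```python
import json
from pathlib import Path

# Provider names used in this section; only local-llama runs on your machine.
LOCAL_PROVIDERS = {"local-llama"}
CLOUD_PROVIDERS = {"OpenAI", "AzureOpenAI"}


def describe_connection(path):
    """Return (service_provider, where inference runs) for a .json connection file."""
    text = Path(path).read_text(encoding="utf-8")
    try:
        config = json.loads(text)
    except json.JSONDecodeError as err:
        # json.loads is strict: trailing commas and comments are errors.
        raise ValueError(f"{path} is not valid JSON: {err}") from err
    provider = config.get("service_provider")
    if provider in LOCAL_PROVIDERS:
        return provider, "local: no internet connection needed, data stays on this machine"
    if provider in CLOUD_PROVIDERS:
        return provider, "cloud: imagery and class labels are sent to the service"
    raise ValueError(f"Unknown service_provider: {provider!r}")
```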

- On the Environments tab, set the environments as follows:

- Processing Extent—Select the default extent or any other option from the drop-down menu.

- Cell Size—Choose a cell size, in meters, that maximizes the visibility of the objects of interest throughout the chosen extent. Use a larger cell size for detecting larger objects and a smaller cell size for detecting smaller objects. For example, set the cell size to 10 meters for cloud detection and to 0.30 meters (30 centimeters) for car detection. For more information about cell size, see Cell size of raster data.

- Processor Type—Select CPU or GPU as needed.

Note:

The Llama Vision model supports inference only on GPUs; CPU-based inference is not supported.

It is recommended that you select GPU if one is available, and set GPU ID to specify which GPU to use.
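The cell size guidance above comes down to simple arithmetic: an object spans roughly its size divided by the cell size in cells along each dimension. The following sketch illustrates this, assuming a car roughly 4.5 meters long (an illustrative value, not from the tool documentation). At 0.30 meters the car spans about 15 cells, while at 10 meters it covers less than half a cell and would not be visible. The helper names are hypothetical.

```python
def pixels_across(object_size_m, cell_size_m):
    """Approximate number of cells an object spans along one dimension."""
    return object_size_m / cell_size_m


def largest_cell_size(object_size_m, min_pixels):
    """Largest cell size, in meters, at which the object still spans min_pixels cells."""
    return object_size_m / min_pixels


# Assumed, illustrative object size in meters.
CAR_LENGTH_M = 4.5

for cell in (0.30, 10.0):
    span = pixels_across(CAR_LENGTH_M, cell)
    print(f"{cell} m cells: a {CAR_LENGTH_M} m car spans {span:.2f} cells")
```

How many cells an object needs to be recognized depends on the model and imagery, so treat min_pixels as a value to tune rather than a fixed rule.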

- Click Run.

As soon as processing finishes, the output layer is added to the map, and the predicted classes are added to the attribute table of the output layer.
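The same workflow can also be scripted with ArcPy. The following is a minimal sketch, not a definitive implementation: it assumes the arcpy.ia.ClassifyObjectsUsingDeepLearning function and the processorType, gpuId, and cellSize environments, and it assumes the Arguments parameter accepts a list of [name, value] pairs. The helper names are hypothetical, and service_provider is passed separately for simplicity rather than read from the connection file. arcpy is imported inside the function so the helper checks can run outside ArcGIS Pro.

```python
def build_model_arguments(prompt, connection_file):
    """Return the Arguments parameter as [name, value] pairs (value-table format assumed)."""
    return [["prompt", prompt], ["ai_connection_file", connection_file]]


def check_processor(service_provider, processor_type):
    """Reject CPU processing for Llama Vision, which supports inference only on GPUs."""
    if service_provider == "local-llama" and processor_type.upper() != "GPU":
        raise ValueError("Llama Vision requires the Processor Type environment set to GPU.")


def run_image_interrogation(in_raster, out_feature_class, model_dlpk, connection_file,
                            prompt="Describe the image", service_provider="OpenAI",
                            processor_type="GPU", gpu_id=0, cell_size=None):
    """Set the environments and run Classify Objects Using Deep Learning."""
    check_processor(service_provider, processor_type)
    import arcpy  # imported here so the helpers above work without ArcGIS Pro

    arcpy.CheckOutExtension("ImageAnalyst")
    # Environment settings from the Environments tab.
    arcpy.env.processorType = processor_type
    arcpy.env.gpuId = gpu_id
    if cell_size is not None:
        arcpy.env.cellSize = cell_size
    arcpy.ia.ClassifyObjectsUsingDeepLearning(
        in_raster=in_raster,
        out_feature_class=out_feature_class,
        in_model_definition=model_dlpk,
        model_arguments=build_model_arguments(prompt, connection_file),
    )
```

For example, from the Python window in ArcGIS Pro you could call run_image_interrogation with your own raster, output feature class, .dlpk file, and a Llama Vision connection file, passing service_provider="local-llama"; the check then fails early if the processor type is not GPU.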