Available in big data analytics.

The Forest-based Classification and Regression tool creates models and generates predictions using an adaptation of Leo Breiman's random forest algorithm, which is a supervised machine learning method. Predictions can be performed for categorical variables (classification) and continuous variables (regression). Explanatory variables can take the form of fields in the attribute table of the training features. In addition to validating model performance based on the training data, the tool can make predictions for features in a separate prediction dataset.

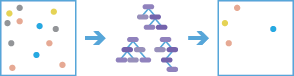

Workflow diagram

Examples

The following are example uses of the Forest-based Classification and Regression tool:

- Given data on occurrence of seagrass, as well as a number of environmental explanatory variables, in addition to distances to upstream factories and major ports, future seagrass occurrence can be predicted based on projections for those same environmental explanatory variables.

- Housing values can be predicted based on the prices of houses that have been sold in the current year. The sale price of homes sold along with information about the number of bedrooms, distance to schools, proximity to major highways, average income, and crime counts can be used to predict sale prices of similar homes.

- Given information on the blood lead level in children and the tax parcel ID of their residence, combined with parcel-level attributes such as age of home, census-level data such as income and education levels, and national datasets reflecting toxic release of lead and lead compounds, the risk of lead exposure for parcels without blood lead level data can be predicted. These risk predictions can inform policies and education programs in the area.

Usage notes

Keep the following in mind when working with the Forest-based Classification and Regression tool:

- This tool can be configured to run in one of two modes:

  - Method 1—If only target (training) data is provided, the tool trains a model to assess model performance. Use this option to evaluate the performance of a configuration as you explore different explanatory variables and tool settings.

  - Method 2—Once a good model and explanatory variables are identified, configure the tool to also use join (prediction) data. When join (prediction) data is configured, the tool predicts values of the variable to predict for features in your join (prediction) data, based on the mapped explanatory variables.

- Use the Variable to predict parameter to select a field from the target input pipeline (training data) representing the phenomenon being modeled. Use the Explanatory variable(s) parameter to select one or more fields representing the explanatory variables from the target input pipeline (training data). These fields must be numeric or categorical and should contain a range of values. Features that contain missing values in the variable to predict or in any explanatory variable are excluded from the analysis. To replace null values, use the Calculate Field tool before running this tool.

- Explanatory variables can come from fields and should contain a variety of values. If an explanatory variable is categorical, check the Categorical check box. Categorical explanatory variables are limited to 60 unique values, although fewer categories generally improve model performance. For a given data size, the more categories a variable contains, the more likely it is to dominate the model and lead to less effective prediction results.

- When mapping explanatory variables, the target (training data) field and the join (prediction data) field must be of the same type. For example, a double field in the training data must be matched to a double field in the prediction data.

- Forest-based models do not extrapolate; they can only classify or predict a value within the range of values on which the model was trained. Train the model with training features and explanatory variables whose values cover the range of the prediction features and variables. The tool will fail if the prediction explanatory variables contain categories that were not present in the training features.

- The default value for the Number of trees parameter is 100. Increasing the number of trees in the forest model generally results in more accurate model predictions, but the model takes longer to calculate.

- The Forest-based Classification and Regression tool also produces output features and diagnostics. Output feature layers automatically have a rendering scheme applied. A full explanation of each output is available in the Output layer section below.

- Features with one or more null values or empty string values in prediction or explanatory fields will be excluded from the output. If necessary, modify values using the Calculate Field tool.

- To learn more about how this tool works, and the ArcGIS Pro geoprocessing tool on which this implementation is based, see How Forest-based Classification and Regression works.
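The notes above on missing values and categorical limits can be checked before data reaches the tool. The following Python sketch is a hypothetical pre-flight helper, not part of ArcGIS Velocity: it assumes each feature is a dict of field name to value, treats `None` and `""` as missing, and applies the 60-category limit to categorical explanatory variables.

```python
from typing import Any

# Hypothetical feature format: field name -> value.
Feature = dict[str, Any]

MAX_CATEGORIES = 60  # unique-value limit for a categorical explanatory variable


def is_missing(value: Any) -> bool:
    """Treat null (None) and empty strings as missing values."""
    return value is None or value == ""


def prepare_training(features: list[Feature], variable_to_predict: str,
                     explanatory: list[str], categorical: set[str]) -> list[Feature]:
    """Drop features with missing values and enforce the categorical limit."""
    fields = [variable_to_predict, *explanatory]
    # Keep only features that have a value in every relevant field.
    kept = [f for f in features
            if not any(is_missing(f.get(name)) for name in fields)]
    for name in explanatory:
        if name in categorical:
            unique = {f[name] for f in kept}
            if len(unique) > MAX_CATEGORIES:
                raise ValueError(
                    f"{name!r} has {len(unique)} categories; limit is {MAX_CATEGORIES}")
    return kept
```

For example, a housing feature with a null sale price or an empty zoning code would be dropped before training, mirroring how the tool excludes such features.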
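A similar hypothetical check on the join (prediction) data can catch field type mismatches and unseen categories before a run fails. This sketch assumes a dict-per-feature format, a `mapping` from training field names to prediction field names, and that Python types stand in for field types (`float` for double, `int` for integer). It raises an error for type mismatches and unseen categories, and only warns about numeric values outside the training range, because forest-based models do not extrapolate beyond it.

```python
from typing import Any

# Hypothetical feature format: field name -> value.
Feature = dict[str, Any]


def check_prediction_inputs(training: list[Feature], prediction: list[Feature],
                            mapping: dict[str, str], categorical: set[str]) -> list[str]:
    """Validate mapped explanatory fields; return warnings for out-of-range values.

    mapping: training field name -> prediction field name (assumed format).
    Raises ValueError on type mismatches or categories unseen in training.
    """
    warnings = []
    for train_name, pred_name in mapping.items():
        train_vals = [f[train_name] for f in training]
        pred_vals = [f[pred_name] for f in prediction]
        # Python types stand in for field types (float ~ double, int ~ integer).
        train_types = {type(v) for v in train_vals}
        pred_types = {type(v) for v in pred_vals}
        if train_types != pred_types:
            raise ValueError(
                f"{train_name} -> {pred_name}: type mismatch {train_types} vs {pred_types}")
        if train_name in categorical:
            unseen = set(pred_vals) - set(train_vals)
            if unseen:
                raise ValueError(
                    f"{pred_name}: categories not seen in training: {sorted(unseen)}")
        else:
            lo, hi = min(train_vals), max(train_vals)
            outside = sum(1 for v in pred_vals if not lo <= v <= hi)
            if outside:
                warnings.append(
                    f"{pred_name}: {outside} value(s) outside training range [{lo}, {hi}]")
    return warnings
```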

Parameters

The following are the parameters for the Forest-based Classification and Regression tool:

| Parameter | Description | Data type |
|---|---|---|
| Target Input Layer (training data) | The training features used to generate a model. The pipeline containing the Variable to predict field and the explanatory training variable fields. | Features |
| Join Input Layer (prediction data) (Optional) | The prediction features for which the variable to predict will be predicted based on the specified explanatory variables and parameters. This parameter is optional. If not specified, the Forest-based Classification and Regression tool will fit a model to assess model performance based on the training data. | Features |
| Variable to predict | The variable from the Target Input Layer (training data) pipeline containing the values to be used to train the model. This field contains known (training) values of the variable that will be used to predict at unknown locations. | FieldName |
| Treat variable as categorical | Specifies whether the variable to predict is a categorical variable. | Boolean |
| Explanatory variable(s) | A list of fields representing the explanatory variables that help predict the value or category of Variable to predict. Check the Categorical check box for any variables that represent classes or categories, such as land cover or presence or absence. | ExplanatoryVariablesConfiguration |
| Explanatory variable mapping (prediction only) | Maps the selected explanatory variable field names in the target (training) schema to corresponding field names in the join (prediction) schema. This parameter is optional. Explanatory variable mappings are only required if join (prediction) data is specified. | ExplanatoryVariableMappings |
| Number of trees | The number of trees to create in the forest model. More trees will generally result in more accurate model prediction, but the model will take longer to calculate. The default number of trees is 100. | Integer |
| Minimum leaf size | The minimum number of observations required to keep a leaf (that is, the terminal node on a tree without further splits). The default minimum for regression is 5 and the default for classification is 1. For very large data, increasing these values will decrease the run time of the tool. | Integer |
| Maximum tree depth | The maximum number of splits to be made down a tree. With a larger maximum depth, more splits are created, which may increase the chance of overfitting the model. The default is data-driven and depends on the number of trees created and the number of variables included. | Integer |
| Sample size | The percentage of Target Input Layer (training data) used for each decision tree. The default is 100 percent of the data. Samples for each tree are taken randomly from two-thirds of the data specified. Each decision tree in the forest is created using a random sample or subset (approximately two-thirds) of the training data available. Using a lower percentage of the input data for each decision tree increases the speed of the tool for very large datasets. | Integer |
| Random variables | The number of explanatory variables used to create each decision tree. Each decision tree in the forest is created using a random subset of the explanatory variables specified. Increasing the number of variables used in each decision tree will increase the chances of overfitting the model, particularly if there are one or more dominant variables. A common practice is to use the square root of the total number of explanatory variables if the variable to predict is numeric or divide the total number of explanatory variables by 3 if the variable to predict is categorical. | String |
| Percentage for validation | The percentage (between 10 percent and 50 percent) of target input training features to reserve as the test dataset for validation. The model will be trained without this random subset of data and the observed values for those features will be compared to the predicted values. The default is 10 percent. | Integer |
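The Percentage for validation parameter holds out a random subset of training features so that predictions can be compared with observed values. A minimal sketch of that split, assuming a simple shuffle-and-slice approach; the function name is hypothetical and the tool's actual sampling may differ.

```python
import random


def split_for_validation(features, pct, seed=None):
    """Reserve pct percent (10-50) of training features as a validation set.

    Returns (training, validation). Shuffle-and-slice is an assumption.
    """
    if not 10 <= pct <= 50:
        raise ValueError("Percentage for validation must be between 10 and 50")
    shuffled = list(features)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * pct / 100)
    return shuffled[n_val:], shuffled[:n_val]
```

With the default of 10 percent, 200 training features would yield 180 features for training and 20 for validation.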
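The Sample size and Random variables descriptions say each tree sees roughly two-thirds of the training data and a random subset of the explanatory variables. In standard random forests, the two-thirds figure comes from bootstrap sampling (drawing rows with replacement), which leaves about 1 − 1/e ≈ 63% of rows distinct; the remaining out-of-bag rows can score that tree, which is the usual source of out-of-bag errors. The sketch below assumes that scheme, with hypothetical function names; it is not Esri's implementation. It follows the table's per-tree wording for variable selection, whereas Breiman's original algorithm draws a new variable subset at every split.

```python
import random


def tree_sample(n_rows, sample_size_pct, rng):
    """Return (in-bag indices, out-of-bag indices) for one tree.

    Assumption: take sample_size_pct percent of rows, then draw a bootstrap
    sample (with replacement) of the same size from that pool.
    """
    pool = rng.sample(range(n_rows), max(1, round(n_rows * sample_size_pct / 100)))
    in_bag = [rng.choice(pool) for _ in range(len(pool))]
    oob = sorted(set(pool) - set(in_bag))  # rows this tree never saw
    return in_bag, oob


def tree_variables(explanatory, k, rng):
    """Pick k distinct explanatory variables for one tree (per-tree subset)."""
    return rng.sample(explanatory, k)
```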
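A single regression tree shows how Minimum leaf size and Maximum tree depth limit tree growth, and why forest-based models do not extrapolate: every leaf stores the mean of its training values, so no prediction can fall outside the training range. This is a simplified sketch (exhaustive splitting on squared error, leaf value = mean), not the tool's implementation; all names are hypothetical.

```python
from statistics import mean


def sse(ys):
    """Sum of squared errors around the mean (the split criterion used here)."""
    m = mean(ys)
    return sum((y - m) ** 2 for y in ys)


def build_tree(rows, ys, max_depth=None, min_leaf=5, depth=0):
    """Grow a regression tree; each leaf stores the mean of its training targets."""
    best = None  # (score, feature index, threshold)
    if max_depth is None or depth < max_depth:
        for j in range(len(rows[0])):
            for t in sorted({r[j] for r in rows}):
                left = [y for r, y in zip(rows, ys) if r[j] <= t]
                right = [y for r, y in zip(rows, ys) if r[j] > t]
                # Reject splits that would leave fewer than min_leaf observations in a leaf.
                if min(len(left), len(right)) < max(1, min_leaf):
                    continue
                score = sse(left) + sse(right)
                if best is None or score < best[0]:
                    best = (score, j, t)
    if best is None:  # depth limit reached or no valid split
        return {"value": mean(ys)}
    _, j, t = best
    go_left = [r[j] <= t for r in rows]
    return {
        "feature": j,
        "threshold": t,
        "left": build_tree([r for r, g in zip(rows, go_left) if g],
                           [y for y, g in zip(ys, go_left) if g],
                           max_depth, min_leaf, depth + 1),
        "right": build_tree([r for r, g in zip(rows, go_left) if not g],
                            [y for y, g in zip(ys, go_left) if not g],
                            max_depth, min_leaf, depth + 1),
    }


def predict(tree, row):
    """Follow splits from the root to a leaf and return the leaf's mean."""
    while "value" not in tree:
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree["value"]
```

For example, on six one-variable points with targets `[10, 12, 11, 30, 31, 29]`, `build_tree(X, y, max_depth=1, min_leaf=2)` splits once, and an input far outside the training range still returns one of the two leaf means. Averaging many such trees, as a forest does, keeps predictions inside the same range.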

Output layer

The Forest-based Classification and Regression tool produces a variety of outputs. A summary of the Forest-based Classification and Regression model and statistical summaries are available on the item details page of the output feature layer or in the analytic logs.

If you use Method 1 to train a model and assess its performance (only training data provided to the tool), the tool produces the following two outputs:

- Output trained features—Contains all of the training features (target schema) used in the model created as well as all of the explanatory variables used in the model. It also contains predictions for all of the features used for training the model, which can be helpful in assessing the performance of the model created.

- Tool summary messages—Messages to help understand the performance of the model created. The messages include information on the model characteristics, out-of-bag errors, variable importance, and validation diagnostics. To access the summary of your results, view the analytic logs or the item details page of the output feature layer.

If you use Method 2 to fit a model and predict values (training and prediction data provided to the tool), the tool produces the following two outputs:

- Output predicted features—A layer of predicted results. Values are predicted for the join (prediction) features using the model trained on the target (training) layer.

- Tool summary messages—Messages to help understand the performance of the model created. The messages include information on the model characteristics, out-of-bag errors, variable importance, and validation diagnostics. To access the summary of your results, view the analytic logs or the item details page of the output feature layer.
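This section does not list which validation diagnostics are reported. As an assumption, common choices are mean squared error and R² for regression and accuracy for classification, each computed by comparing observed and predicted values for the validation features. A minimal sketch with hypothetical function names:

```python
def regression_diagnostics(observed, predicted):
    """Return (mean squared error, R-squared) for validation features."""
    n = len(observed)
    mse = sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n
    mean_obs = sum(observed) / n
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    r2 = 1 - (mse * n) / ss_tot  # 1 - residual sum of squares / total sum of squares
    return mse, r2


def classification_accuracy(observed, predicted):
    """Fraction of validation features whose predicted category matches the observed one."""
    return sum(o == p for o, p in zip(observed, predicted)) / len(observed)
```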

Considerations and limitations

A single data pipeline for training data and a single data pipeline for prediction data are supported.