ArcGIS Velocity ingests data for real-time and big data analytics using feeds or data sources. A feed is a real-time stream of data. A data source loads static or near real-time data once the real-time analytic starts, making it available for rapid joins, enrichment, and geofencing.

You can use a feed to leverage real-time data or use a data source as join data for analytic tools in real-time analytics.

Velocity provides a streamlined and contextual workflow to optimize your experience when configuring input data from a feed or data source. This configuration workflow is common across the various feed and source types.

Set connection and configuration parameters

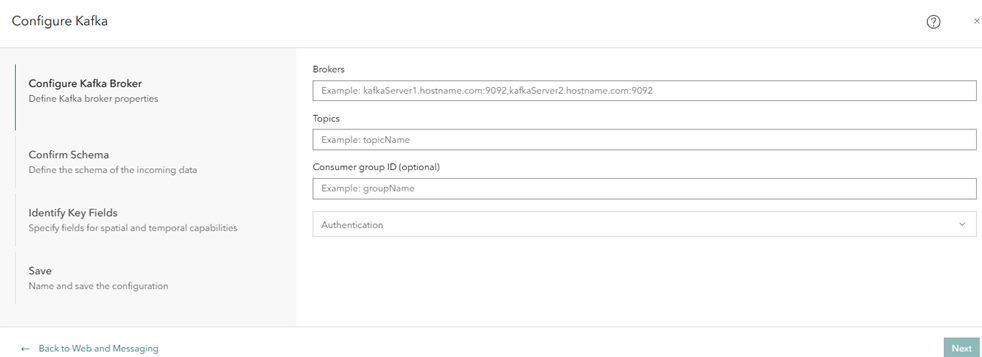

The first step when configuring a feed or data source is to define the required connection and configuration parameters so that Velocity can connect to the data. The available parameters depend on the feed or data source type.

For example, when configuring a Kafka feed, fill in the Broker and Topic parameters to connect to the data. When configuring an Amazon S3 data source, you must provide all relevant connection parameter values to establish a successful connection.

Next, Velocity validates the connection using the configuration parameters provided. Velocity then attempts to sample the data and derive its schema. If the connection is not successful or the schema cannot be derived, update the configuration parameters and try again.

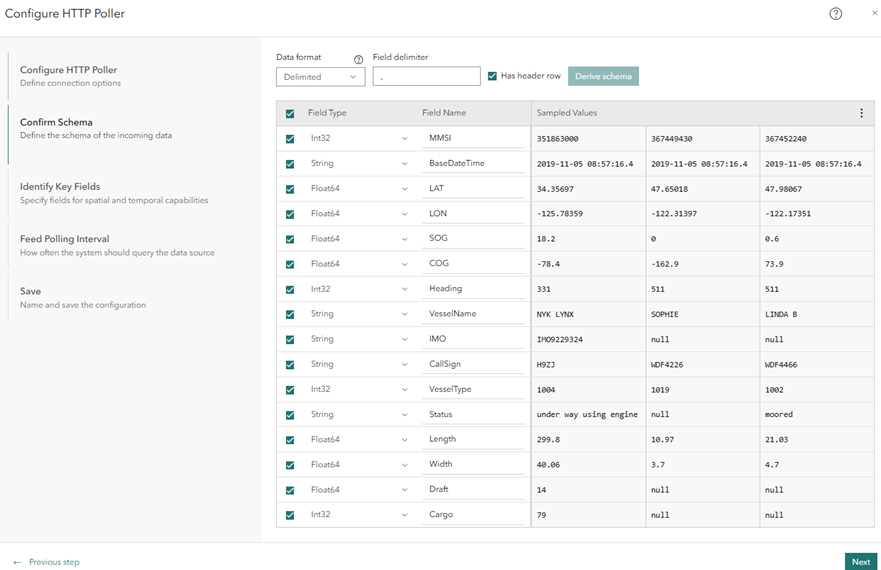

Confirm schema

The Confirm Schema step displays the returned schema and a data sample. Depending on the data format, additional parameters may be available to adjust how the data is parsed into a valid schema.

For this step, you can review and adjust the field names, field types, and data formats. You can also derive the data again to acquire new samples or derive schema after making adjustments to the data format or the data format parameters. This ensures that Velocity can identify the format of the data being ingested by the feed or data source.

Automatic sampling and schema derivation

For the Confirm Schema step, Velocity connects to the specified feed or data source using the connection and configuration parameters set in the previous step and retrieves sample data.

From the sample data, Velocity automatically derives the data format and the schema, which consists of the field names and field types. For some data formats, the geometry and date and time fields are also identified.
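As a rough illustration of what deriving field names and field types from sample records involves, the following Python sketch infers a schema from a list of sampled records. It is not Velocity's implementation: the function names, the `Boolean` type label, and the widening rule for mixed types are assumptions made for this example.

```python
from typing import Any

# Hypothetical ordering used to widen numeric types when samples disagree.
_NUMERIC_ORDER = ["Int32", "Int64", "Float64"]


def infer_type(value: Any) -> str:
    """Map one sampled value to a field type label."""
    # bool is checked first because bool is a subclass of int in Python.
    if isinstance(value, bool):
        return "Boolean"  # assumed label, not taken from the documentation
    if isinstance(value, int):
        return "Int32" if -2**31 <= value < 2**31 else "Int64"
    if isinstance(value, float):
        return "Float64"
    return "String"


def widen(a: str, b: str) -> str:
    """Pick a type that can hold values of both a and b."""
    if a in _NUMERIC_ORDER and b in _NUMERIC_ORDER:
        return max(a, b, key=_NUMERIC_ORDER.index)
    return "String"


def derive_schema(samples: list[dict[str, Any]]) -> dict[str, str]:
    """Derive field names and types from a list of sampled records."""
    schema: dict[str, str] = {}
    for record in samples:
        for name, value in record.items():
            if value is None:
                continue  # a missing value says nothing about the type
            inferred = infer_type(value)
            previous = schema.get(name)
            schema[name] = inferred if previous is None else widen(previous, inferred)
    return schema


samples = [
    {"id": 1, "speed": 10, "name": "truck-a"},
    {"id": 2, "speed": 10.5, "name": "truck-b"},
]
schema = derive_schema(samples)
```

In this sketch, a field that is an integer in one record and a float in another is widened to `Float64`, which is one reason a larger or fresher sample can produce a different derived schema.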

Change field types and field names

Velocity displays field types and field names as identified by schema derivation based on the acquired data sample.

To make adjustments to the derived schema, complete the following steps:

- Modify the data format parameters and resample the schema before adjusting the field types and field names.

If you change the data format or data format parameters and derive the schema again, any field type and field name changes you already made are overwritten.

- To change a field type, click the drop-down arrow next to the field name and choose another field type.

Caution:

You cannot change field types when using certain feed or data source types such as feature layer feeds or data sources. Use caution when changing the field type. Keep the following in mind:

- Any field type can be changed to a string type field; however, if you attempt to change a string type field containing letters to an integer type field, an error occurs during data ingestion.

- Changing fields from a float type (Float32 or Float64) to an integer type (Int32 or Int64) is not recommended. Changing field types is not intended for on-the-fly conversion of numerical values. For some formats, downgrading from a float to an integer can cause the value to be skipped entirely.

- Change the field names as necessary.

- To unselect a field, uncheck the check box next to the field type.

The field is ignored when data is ingested from the source. As a best practice, uncheck any unnecessary fields to improve performance with high-velocity or high-volume data.
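The field type cautions above can be illustrated with a small Python sketch. It only mimics the general behavior of strict type conversion; the `coerce` and `try_coerce` helpers are hypothetical and do not reflect how Velocity converts values internally.

```python
from typing import Any


def coerce(value: Any, field_type: str) -> Any:
    """Convert a raw value to the requested field type, or raise ValueError."""
    if field_type == "String":
        return str(value)  # any value can be represented as a string
    if field_type in ("Int32", "Int64"):
        return int(value)  # fails for "TRUCK-7" and also for the text "12.7"
    if field_type in ("Float32", "Float64"):
        return float(value)
    raise ValueError(f"unsupported field type: {field_type}")


def try_coerce(value: Any, field_type: str) -> tuple[bool, Any]:
    """Return (True, converted) on success or (False, None) on failure."""
    try:
        return True, coerce(value, field_type)
    except (TypeError, ValueError):
        return False, None


ok_number = try_coerce("42", "Int64")        # numeric text converts cleanly
bad_letters = try_coerce("TRUCK-7", "Int64")  # letters cannot become an integer
bad_float = try_coerce("12.7", "Int32")       # float text is rejected, not rounded
as_string = try_coerce(12.7, "String")        # anything can become a string
```

The third case shows why changing a float field to an integer type is risky: a strict parser rejects the value instead of rounding it, which is comparable to the value being skipped.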

Change the data format and data format parameters

Velocity can consume data from various feed and data source types in a variety of data formats. Some feed and data source types, such as HTTP Poller, can consume data in various formats. Other feed and data source types, such as Feature Layer, have a fixed data format.

The following data formats are supported:

- Delimited

- JSON

- GeoJSON

- Esri JSON

- RSS

- GeoRSS

- Shapefile (only available for Amazon S3 and Azure Blob Store data sources)

- Parquet (only available for Amazon S3 and Azure Blob Store data sources)

Velocity automatically attempts to derive the format of the data. However, you can change the derived data format as necessary.

Additionally, some data formats have parameters that you can adjust regarding how Velocity parses the data into a schema. For example, the delimited data format has two parameters: Field delimiter and Has header row.
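To show how these two delimited parameters change the parsed result, here is a minimal Python sketch using the standard `csv` module. The function name, the argument names, and the generated `field_N` names for headerless data are assumptions for illustration, not Velocity behavior.

```python
import csv
import io


def parse_delimited(text: str, field_delimiter: str = ",", has_header_row: bool = True) -> list[dict[str, str]]:
    """Parse delimited text into records keyed by field name."""
    reader = csv.reader(io.StringIO(text), delimiter=field_delimiter)
    rows = [row for row in reader if row]  # drop blank lines
    if not rows:
        return []
    if has_header_row:
        header, data = rows[0], rows[1:]
    else:
        # Without a header row, field names have to be generated (assumed naming).
        header = [f"field_{i + 1}" for i in range(len(rows[0]))]
        data = rows
    return [dict(zip(header, row)) for row in data]


with_header = parse_delimited("id;speed\n1;10.5\n", field_delimiter=";")
without_header = parse_delimited("1,10.5\n", has_header_row=False)
```

Choosing the wrong delimiter in this sketch yields a single field containing the whole line, which is the kind of result that signals the data format parameters need adjusting before the schema is confirmed.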

Change data format parameters and derive schema

Using the derived data sample, Velocity attempts to define the format, schema, and parameters of the data.

You can modify the data format parameters or specify a different data format. To do this, change the data format property and click the Derive schema option to derive the data again according to the changes you made. The parameters update accordingly based on the derived data.

For example, if you're connecting to a JSON source with multilevel nested JSON and you only want to collect data from a specific JSON node, or you want to flatten multilevel JSON to retrieve all attribute values, you can use the Root node, Flatten, Flatten arrays, and Retain element(s) parameters to configure Velocity to interact with the JSON data directly.
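The general idea behind selecting a root node and flattening nested JSON can be sketched in Python as follows. The dotted path syntax, the underscore separator in flattened names, and both helper functions are assumptions for this example; they do not describe the exact behavior of the Velocity parameters, and array flattening and element retention are not covered.

```python
import json
from typing import Any


def select_root(document: dict[str, Any], root_node: str) -> Any:
    """Walk a dotted path (assumed syntax) down to the node holding the records."""
    node: Any = document
    for key in root_node.split("."):
        node = node[key]
    return node


def flatten(obj: dict[str, Any], prefix: str = "", sep: str = "_") -> dict[str, Any]:
    """Collapse nested objects into a single level of attributes."""
    flat: dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


raw = '{"response": {"vehicles": [{"id": "A1", "position": {"x": -117.2, "y": 34.05}}]}}'
document = json.loads(raw)
records = [flatten(r) for r in select_root(document, "response.vehicles")]
```

Here the records of interest live under `response.vehicles`, and flattening turns the nested `position` object into top-level `position_x` and `position_y` attributes.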

Sampled data is not returned

If sampled data is not returned in Velocity, do any of the following:

- Verify that the connection and configuration parameters are correct.

- Click the Derive schema option to resample when data is flowing or available.

- Provide samples by copying records.

Samples can be reviewed for their data format and to derive a valid schema.

- Manually define the format and schema of the data.

Identify key fields

The next step in configuring input data for the new feed or data source is to identify key fields. Key fields are used to parse feature geometry from fields, construct dates from strings, specify start and end time fields, and designate a field as a track ID.

Location

For many feed and data source types, you must define how Velocity determines the geometry of features from observations or records. Geometry can be defined using a single geometry field or X/Y fields. Alternatively, you can load tabular data without location and not specify geometry fields.
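As a simple illustration of deriving geometry from X/Y fields, the following Python sketch builds a GeoJSON-style point from two named fields and returns `None` for records without a usable location. The function name and the sample field names `lon` and `lat` are hypothetical.

```python
from typing import Any, Optional


def point_from_xy(record: dict[str, Any], x_field: str, y_field: str) -> Optional[dict[str, Any]]:
    """Build a GeoJSON Point from X/Y fields, or None if they are missing or invalid."""
    try:
        x = float(record[x_field])
        y = float(record[y_field])
    except (KeyError, TypeError, ValueError):
        return None  # treat the record as tabular data without location
    return {"type": "Point", "coordinates": [x, y]}


located = point_from_xy({"lon": "-117.19", "lat": "34.05"}, "lon", "lat")
tabular = point_from_xy({"name": "depot"}, "lon", "lat")
```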

Date and time

The features in a feed or data source can have date and time fields available. If you specify that the data has date fields, you also need to specify the date format. The options are Epoch milliseconds, Epoch seconds, and Other (string). If you choose Other (string), you must specify a Date Formatting String value so Velocity can parse the string into a date.
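The difference between the three date format options can be sketched in Python. This is an illustration only: the option keys are invented for the example, the string pattern uses Python `strptime` directives rather than the Date Formatting String syntax that Velocity expects, and the sketch assumes all values are in UTC.

```python
from datetime import datetime, timezone
from typing import Optional, Union


def parse_date(value: Union[int, str], date_format: str, format_string: Optional[str] = None) -> datetime:
    """Parse a raw date value according to the chosen date format option."""
    if date_format == "epoch_milliseconds":
        return datetime.fromtimestamp(int(value) / 1000, tz=timezone.utc)
    if date_format == "epoch_seconds":
        return datetime.fromtimestamp(int(value), tz=timezone.utc)
    if date_format == "string":
        if format_string is None:
            raise ValueError("a format string is required for string dates")
        # Assumes the string carries no time zone and represents UTC.
        return datetime.strptime(str(value), format_string).replace(tzinfo=timezone.utc)
    raise ValueError(f"unknown date format: {date_format}")


from_millis = parse_date(1700000000000, "epoch_milliseconds")
from_seconds = parse_date(1700000000, "epoch_seconds")
from_string = parse_date("2023-11-14 22:13:20", "string", "%Y-%m-%d %H:%M:%S")
```

All three calls describe the same instant. Choosing Epoch seconds for a value that is actually in milliseconds would shift the date by a factor of 1,000, so it is worth checking the parsed sample values before continuing.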

Additionally, you can choose a key field for the Start time (optional) option. You do not need to set a start time or end time to analyze and process data. However, some tools in real-time and big data analytics require a start time or a start time and an end time to be identified to perform temporal analysis.

Tracking

The key field set for the Track ID option is a unique identifier in the data that relates features to specific entities. For example, a truck can be identified by its license plate number or an aircraft by an assigned flight number. These identifiers can be used as track IDs to track the features associated with a particular real-world entity or a set of incidents.

You do not need to set a track ID field to analyze and process data. However, some tools in real-time and big data analytics require a track ID to be identified for the feed or data source.
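Conceptually, a track ID groups individual observations into per-entity sequences. The Python sketch below shows that grouping on a few invented records; the field name `plate` and the helper function are hypothetical.

```python
from collections import defaultdict
from typing import Any


def group_by_track(records: list[dict[str, Any]], track_id_field: str) -> dict[Any, list[dict[str, Any]]]:
    """Group observations by the value of their track ID field, preserving order."""
    tracks: dict[Any, list[dict[str, Any]]] = defaultdict(list)
    for record in records:
        tracks[record[track_id_field]].append(record)
    return dict(tracks)


observations = [
    {"plate": "ABC-123", "speed": 52},
    {"plate": "XYZ-789", "speed": 47},
    {"plate": "ABC-123", "speed": 55},
]
tracks = group_by_track(observations, "plate")
```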

Schedule polling interval

While many feeds stream data, some feed types require the data to be retrieved at regular intervals. The defined interval determines how often the feed connects to the source to retrieve data. You can set a polling interval for the following feed types:

Learn more about scheduling a feed polling interval

Note:

When setting up Velocity feeds, you can set the recurrence value for seconds to a factor of 60 between 10 and 30 (that is, 10, 12, 15, 20, or 30) for a consistent and predictable runtime.
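The arithmetic behind this note can be checked with a short Python sketch. It lists the factors of 60 in the stated range and, under the assumption that the schedule fires at fixed offsets from the start of each minute, shows why such values repeat identically from one minute to the next. Both function names are hypothetical.

```python
def valid_second_intervals(low: int = 10, high: int = 30) -> list[int]:
    """Return the values in [low, high] that divide 60 evenly."""
    return [n for n in range(low, high + 1) if 60 % n == 0]


def seconds_within_minute(interval: int) -> list[int]:
    """Seconds past the minute at which a run would start (assumed fixed offsets)."""
    return list(range(0, 60, interval))


intervals = valid_second_intervals()
every_20 = seconds_within_minute(20)
every_25 = seconds_within_minute(25)
```

With an interval of 20 the runs start at seconds 0, 20, and 40 of every minute. An interval of 25 does not divide 60, so the last gap before the next minute is shorter than the others and the spacing is uneven.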

Save

The final step is to provide a feed name and, optionally, a feed summary; then save the feed.