Every ArcGIS Drone2Map project includes a detailed processing report that displays the results. To access the report once the initial processing step is completed, on the Home tab, in the Processing section, click Report. You can also access the processing report at any time in the project folder in PDF and HTML format. The processing report includes the following sections:

Summary

Project | Name of the project. |

Processed | Date and time of processing. |

Camera Model Name | The name of the camera model used to capture the images. |

Average Ground Sampling Distance (GSD) | The average GSD of the initial images. |

Area Covered | The 2D area covered by the project. This area is not affected if a smaller orthomosaic area has been drawn. |

Time for Initial Processing (without report) | The time for initial processing without taking into account the time needed for the generation of the processing report. |

Quality Check

Images

The median of keypoints per image. Keypoints are characteristic points that can be detected on the images. | ||

| Keypoints Image Scale > 1/4: More than 10,000 keypoints have been extracted per image. Keypoints Image Scale ≤ 1/4: More than 1,000 keypoints have been extracted per image. | Images have enough visual content to be processed. |

| Keypoints Image Scale > 1/4: Between 500 and 10,000 keypoints have been extracted per image. Keypoints Image Scale ≤ 1/4: Between 200 and 1,000 keypoints have been extracted per image. | Not much visual content could be extracted from the images. This may lead to a low number of matches in the images and incomplete reconstruction or low-quality results. This may occur due to the following factors:

|

| Keypoints Image Scale > 1/4: Fewer than 500 keypoints have been extracted per image. Keypoints Image Scale ≤ 1/4: Fewer than 200 keypoints have been extracted per image. Failed Processing Report: Displays if the information is not available. | What to do: Same as above, increasing the overlap (>90%). |
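The keypoint thresholds in the rows above can be expressed as a small check. The following Python sketch is illustrative only and is not part of Drone2Map: the function name `keypoint_check` and the labels `pass`, `warning`, and `fail` are assumptions that stand in for the three rows of the table.

```python
def keypoint_check(median_keypoints: float, image_scale: float) -> str:
    """Classify the median keypoints per image using the documented thresholds.

    The labels "pass", "warning", and "fail" are hypothetical names for the
    three table rows; the report itself does not use these strings.
    """
    # The limits depend on whether the Keypoints Image Scale is above 1/4.
    good, low = (10_000, 500) if image_scale > 0.25 else (1_000, 200)
    if median_keypoints > good:
        return "pass"
    if median_keypoints >= low:
        return "warning"
    return "fail"


print(keypoint_check(12_500, 0.5))  # pass
```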

Dataset

The number of enabled images that have been calibrated, that is, the number of images that have been used for the reconstruction of the model. If the reconstruction results in more than one block, the number of blocks is displayed. This section also shows the number of images that have been disabled by the user. If processing fails, the number of enabled images is displayed. | ||

| More than 95 percent of enabled images are calibrated in one block. | All or almost all images have been calibrated in a single block. |

| Between 60 percent and 95 percent of enabled images are calibrated, or more than 95 percent of enabled images are calibrated in multiple blocks. | Many images have not been calibrated (A) or multiple blocks have been generated (B). Uncalibrated images are not used for processing. This may occur due to the following factors:

Multiple blocks: A block is a set of images that were calibrated together. Multiple blocks indicate that there were not enough matches between the different blocks to provide a global optimization. The different blocks might not be perfectly georeferenced with respect to each other. Capturing new images with more overlap may be required. |

| Less than 60 percent of enabled images are calibrated. Failed Processing Report: Always displayed as the information is not available. | What to do: Same as above. Such a low score may also indicate a severe problem in the following areas:

|
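As a rough illustration of the Dataset check, the sketch below computes the share of calibrated images and maps it to the three rows above. It is a hypothetical helper, not Drone2Map code: the function name, its parameters, and the returned labels are assumptions.

```python
def dataset_check(calibrated: int, enabled: int, blocks: int = 1) -> str:
    """Classify a project by the percentage of enabled images that calibrated.

    Labels are hypothetical stand-ins for the three rows of the table.
    """
    if enabled <= 0:
        raise ValueError("enabled must be a positive number of images")
    share = 100.0 * calibrated / enabled
    # More than 95 percent in a single block is the best case.
    if share > 95 and blocks == 1:
        return "pass"
    # 60 to 95 percent, or more than 95 percent split over several blocks.
    if share >= 60:
        return "warning"
    return "fail"
```

For example, `dataset_check(98, 100, blocks=2)` falls into the middle row because the images were calibrated in more than one block.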

Camera optimization

Perspective lens: The percentage of difference between initial and optimized focal length. Fish-eye lens: The percentage of difference between the initial and optimized affine transformation parameters C and F. The software can read the focal length and the sensor dimensions in pixels (pixel*pixel) from the EXIF data, but it cannot always read the correct pixel size needed to calculate the physical sensor size (mm*mm). For this reason, the software assumes a sensor size of 36*24 mm, that is, it assumes that the images have the 35 mm equivalent sensor size. Starting from the initial focal length, which is read from the EXIF data or given by the user, it recalculates the most appropriate value for the 36*24 mm sensor size. | ||

| Perspective lens: The percentage of difference between initial and optimized focal length is less than 5 percent. Fish-eye lens: The percentage of difference between the initial and optimized affine transformation parameters C and F is less than 5 percent. | The focal length and affine transformation parameters are properties of the camera's sensor and optics. They vary with temperature, shocks, altitude, and time. The calibration process starts from an initial camera model and optimizes the parameters. It is normal for the focal length and affine transformation parameters to differ slightly from project to project. An initial camera model should be within 5% of the optimized value to ensure a fast and robust optimization. |

| Perspective lens: The percentage of difference between initial and optimized focal length is between 5 and 20 percent. Fish-eye lens: The percentage of difference between the initial and optimized affine transformation parameters C and F is between 5 and 20 percent. | What to do:

|

| Perspective lens: The percentage of difference between initial and optimized focal length is more than 20 percent. Fish-eye lens: The percentage of difference between initial and optimized affine transformation parameters C and F is more than 20 percent. Failed Processing Report: Always displayed, as the information is not available. | What to do: Same as above. |
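The percentage of difference used in this check can be sketched as follows. This is a minimal illustration, assuming that the difference is measured relative to the initial value and that the 5 percent and 20 percent limits mentioned in the table above separate the three cases; the function name and the labels are hypothetical.

```python
def focal_length_check(initial: float, optimized: float) -> tuple:
    """Return (difference in percent, label) for a camera optimization result.

    Assumption: the difference is taken relative to the initial value.
    The labels are hypothetical names for the three table rows.
    """
    if initial <= 0:
        raise ValueError("initial focal length must be positive")
    diff_pct = abs(optimized - initial) / initial * 100.0
    if diff_pct < 5:
        label = "pass"
    elif diff_pct <= 20:
        label = "warning"
    else:
        label = "fail"
    return diff_pct, label
```

With an initial focal length of 4,000 pixels and an optimized value of 4,100 pixels, the difference is 2.5 percent, which is within the 5 percent range recommended for a fast and robust optimization.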

Matching

The median of matches per calibrated image. | ||

| Keypoints Image Scale > 1/4: More than 1,000 matches have been computed per calibrated image. Keypoints Image Scale ≤ 1/4: More than 100 matches have been computed per calibrated image. | This indicates that the results are likely to be of high quality in the calibrated areas. Figure 5 of the Quality Report is useful to assess the strength and quality of matches. |

| Keypoints Image Scale > 1/4: Between 100 and 1,000 matches have been computed per calibrated image. Keypoints Image Scale ≤ 1/4: Between 50 and 100 matches have been computed per calibrated image. | A low number of matches in the calibrated images may indicate that the results are not very reliable; changes in the initial camera model parameters or in the set of images may improve the results. Figure 5 of the Quality Report shows the areas with very weak matches. A low number of matches is very often related to low overlap between the images. What to do: See the Dataset Quality Check section to improve the results. You may need to restart the calibration a few times with different settings (camera model, manual tie points) to get more matches. To avoid this situation, acquire images with more systematic overlap. |

| Keypoints Image Scale > 1/4: Fewer than 100 matches have been computed per calibrated image. Keypoints Image Scale ≤ 1/4: Fewer than 50 matches have been computed per calibrated image. Failed Processing Report: Displays if the information is not available. | What to do: Same as above. The minimum number of matches to calibrate an image is 25. |

Georeferencing

Displays whether the project is georeferenced or not. If it is georeferenced, it displays what has been used to georeference the project:

If processing fails, the number of GCPs defined in the project is displayed. | ||

| GCPs are used and the GCP error is less than the average GSD. | For optimal results, GCPs should be well distributed over the dataset area. Optimal accuracy is usually obtained with 5 to 10 GCPs. |

| GCPs are used and the GCP error is less than two times the average GSD, or no GCPs are used. Failed Processing Report: Always displays whether GCPs are used or not. | GCPs are used: GCPs might not have been marked very precisely. Verify the GCP marks and, if needed, add more marks in more images. If possible, select images with a large base (distance between the images), as this helps to compute the 3D positions of the GCPs more precisely. No GCPs are used: There are two cases in which No GCPs is displayed:

|

| GCPs are used and the GCP error is more than two times the average GSD. | A GCP error greater than two times the average GSD may indicate a severe issue with the dataset or, more likely, an error when marking or specifying the GCPs. |
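The comparison between the GCP error and the average GSD can be illustrated with a short sketch. It is not Drone2Map code: the function name and labels are hypothetical, both values are assumed to be in the same unit, and an error of exactly two times the GSD is treated as the worst case, which the table does not specify.

```python
from typing import Optional


def georeferencing_check(gcp_error: Optional[float], average_gsd: float) -> str:
    """Classify the georeferencing result from the GCP error and average GSD.

    Pass gcp_error=None when no GCPs are used. Labels are hypothetical.
    """
    if gcp_error is None:
        # No GCPs: the table lists this case in the middle row.
        return "warning"
    if gcp_error < average_gsd:
        return "pass"
    if gcp_error < 2 * average_gsd:
        return "warning"
    return "fail"
```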

Preview

Displays a preview of the orthomosaic and the corresponding sparse digital surface model before densification.

Calibration Details

Number of Calibrated Images | The number of the images that have been calibrated. These are the images that have been used for the reconstruction, with respect to the total number of the images in the project (enabled and disabled images). |

Number of Geolocated Images | The number of the images that are geolocated. |

Initial Image Positions

Displays a graphical representation of the top view of the initial image position. The graph should correspond to the flight plan.

Computed Image/GCP Positions

Displays a graphical representation of the offset between initial (blue dots) and computed (green dots) image positions as well as the offset between the GCPs' initial positions (blue crosses) and their computed positions (green crosses) in the top view (XY plane), front view (XZ plane), and side view (YZ plane). Dark green ellipses indicate the absolute position uncertainty (Nx magnified) of the bundle block adjustment result.

Images

There might be a small offset between the initial and computed image positions because of image geolocation synchronization issues or GPS noise. If the offset is very high for many images, it may affect the quality of the reconstruction and may indicate severe issues with the image geolocation (missing images, wrong coordinate system, or coordinate inversions).

A bent or curved shape in the side and front views may indicate a problem in the optimization of the camera parameters. Ensure that the correct camera model is used. If the camera parameters are wrong, correct them and reprocess. If they are correct, the camera calibration can be improved by doing the following:

- Increasing overlap/image quality.

- Removing ambiguous images (shot from same position, takeoff or landing, too much angle, image quality too low).

- Introducing ground control points.

GCPs/Check Points

An offset between initial and computed position may indicate severe issues with the geolocation due to wrong GCP/Check Point initial positions, wrong coordinate system or coordinate inversions, wrong marks on the images, or wrong point accuracy.

Uncertainty Ellipses

The size of the uncertainty ellipses as drawn does not indicate their absolute value, because they have been magnified by a constant factor noted in the figure caption. In projects with GCPs, the uncertainty ellipses close to the GCPs should be very small and should increase for images farther away. This can be improved by distributing the GCPs homogeneously in the project.

In projects only with image geolocation, all ellipses should be similar in size. Exceptionally large ellipses may indicate calibration problems of a single image or all images in an area of the project. This can be improved by doing the following:

- Rematching and optimizing the project.

- Removing images of low quality.

Absolute camera position and orientation uncertainties

In projects with only image geolocation, the absolute camera position uncertainty should be similar to the expected GPS accuracy. As all images are positioned with similar accuracy, the sigma reported in the table should be small compared to the mean. In such projects, the absolute camera position uncertainties may be bigger than the relative ones in the table Relative position and orientation uncertainties.

In projects with GCPs, a large sigma can signify that some areas of the project (typically those far away from any GCPs) are less accurately reconstructed and may benefit from additional GCPs.

Mean X/Y/Z | Mean uncertainty in the X/Y/Z direction of the absolute camera positions. |

Mean Omega/Phi/Kappa | Mean uncertainty in the omega/phi/kappa orientation angle of the absolute camera positions. |

Sigma X/Y/Z | Sigma of the uncertainties in the X/Y/Z direction of the absolute camera positions. |

Sigma Omega/Phi/Kappa | Sigma of the uncertainties in the omega/phi/kappa angle of the absolute camera positions. |

Overlap

Displays the number of overlapping images computed for each pixel of the orthomosaic. Red and yellow areas indicate low overlap for which poor results may be generated. Green areas indicate an overlap of over five images for every pixel. Good-quality results will be generated as long as the number of keypoint matches is also sufficient for these areas (refer to keypoint matches).

Bundle Block Adjustment Details

Number of 2D Keypoint Observations for Bundle Block Adjustment | The number of automatic tie points on all images that are used for the automatic aerial triangulation (AAT) or bundle block adjustment (BBA). It corresponds to the number of all keypoints (characteristic points) that could be matched in at least two images. |

Number of 3D Points for Bundle Block Adjustment | The number of all 3D points that have been generated by matching 2D points on the initial images. |

Mean Reprojection Error | The average of the reprojection error in pixels. Each computed 3D point has initially been detected on the images (2D keypoint). On each image, the detected 2D keypoint has a specific position. When the computed 3D point is projected back to the images, it has a reprojected position. The distance between the initial position and the reprojected one gives the reprojection error. |
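The Mean Reprojection Error described above is the average pixel distance between each detected 2D keypoint and the reprojection of its 3D point. A minimal sketch, assuming the detected and reprojected positions are already available as matching lists of (x, y) pixel coordinates (the function name and the sample values are hypothetical):

```python
import math


def mean_reprojection_error(detected, reprojected):
    """Average distance in pixels between detected and reprojected positions.

    detected, reprojected: equally long sequences of (x, y) pixel coordinates,
    where entry i of both sequences refers to the same observation.
    """
    if not detected or len(detected) != len(reprojected):
        raise ValueError("need two non-empty sequences of equal length")
    distances = [
        math.hypot(dx - rx, dy - ry)
        for (dx, dy), (rx, ry) in zip(detected, reprojected)
    ]
    return sum(distances) / len(distances)


# Two observations with errors of 5 px and 1 px.
print(mean_reprojection_error([(100, 200), (50, 50)], [(103, 204), (50, 51)]))  # 3.0
```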

Internal Camera Parameters for Perspective Lens

Camera model name + sensor dimensions | The camera model name is also displayed, as well as the sensor dimensions. |

EXIF ID | The EXIF ID to which the camera model is associated. |

Initial Values | The initial values of the camera model. |

Optimized Values | The optimized values that are computed from the camera calibration and that are used for the processing. |

Uncertainties (Sigma) | The sigma of the uncertainties of the focal length, the Principal Point x, the Principal Point y, the Radial Distortions R1, R2, R3, and the Tangential Distortions T1, T2. |

Focal Length | The focal length of the camera in pixels and in millimeters. If the sensor size is the real one, the focal length should be the real one. If the sensor size is given as 36 by 24 mm, the focal length should be the 35 mm equivalent focal length. |

Principal Point x | The x image coordinate of the principal point in pixels and in millimeters. The principal point is located around the center of the image. |

Principal Point y | The y image coordinate of the principal point in pixels and in millimeters. The principal point is located around the center of the image. |

R1 | Radial distortion of the lens R1. |

R2 | Radial distortion of the lens R2. |

R3 | Radial distortion of the lens R3. |

T1 | Tangential distortion of the lens T1. |

T2 | Tangential distortion of the lens T2. |

Residual Lens Error | This figure displays the residual lens error. The number of Automatic Tie Points (ATPs) per pixel averaged over all images of the camera model is color coded between black and white. White indicates that, on average, more than 16 ATPs are extracted at this pixel location. Black indicates that, on average, no ATPs have been extracted at this pixel location. Click the image to see the average direction and magnitude of the reprojection error for each pixel. Note that the vectors are scaled for better visualization. |
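The Focal Length row above reports the same quantity in pixels and in millimeters. The conversion between the two can be sketched as follows, assuming square pixels and a sensor width that spans the full image width; the function names and the example numbers are hypothetical and do not come from a Drone2Map report.

```python
def focal_px_to_mm(focal_px: float, sensor_width_mm: float, image_width_px: int) -> float:
    """Convert a focal length from pixels to millimeters."""
    pixel_size_mm = sensor_width_mm / image_width_px
    return focal_px * pixel_size_mm


def focal_mm_to_px(focal_mm: float, sensor_width_mm: float, image_width_px: int) -> float:
    """Convert a focal length from millimeters to pixels."""
    return focal_mm * image_width_px / sensor_width_mm


# With the assumed 36 mm sensor width, the result is the 35 mm equivalent value.
print(focal_px_to_mm(3000, 36.0, 4000))  # 27.0
```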

Internal Camera Parameters for Fisheye Lens

Camera model name + sensor dimensions | The camera model name is also displayed, as well as the sensor dimensions. |

EXIF ID | The EXIF ID to which the camera model is associated. |

Initial Values | The initial values of the camera model. |

Optimized Values | The optimized values that are computed from the camera calibration and that are used for the processing. |

Uncertainties (Sigma) | The sigma of the uncertainties of the Polynomial Coefficient 1,2,3,4 and the Affine Transformation parameters C,D,E,F. |

Poly[0] | Polynomial coefficient 1 |

Poly[1] | Polynomial coefficient 2 |

Poly[2] | Polynomial coefficient 3 |

Poly[3] | Polynomial coefficient 4 |

c | Affine transformation C |

d | Affine transformation D |

e | Affine transformation E |

f | Affine transformation F |

Principal Point x | The x image coordinate of the principal point in pixels. The principal point is located around the center of the image. |

Principal Point y | The y image coordinate of the principal point in pixels. The principal point is located around the center of the image. |

Residual Lens Error | This figure displays the residual lens error. The number of Automatic Tie Points (ATPs) per pixel averaged over all images of the camera model is color coded between black and white. White indicates that, on average, more than 16 ATPs are extracted at this pixel location. Black indicates that, on average, no ATPs have been extracted at this pixel location. Click the image to see the average direction and magnitude of the reprojection error for each pixel. Note that the vectors are scaled for better visualization. |

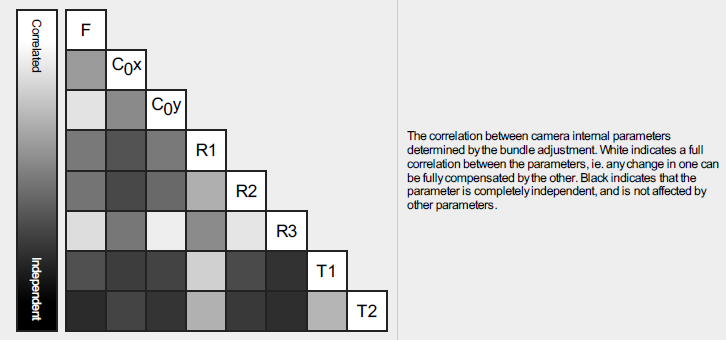

Internal Camera Parameters Correlation

The correlation between camera internal parameters determined by the bundle adjustment. The correlation matrix displays how much the internal parameters compensate for each other.

White indicates a full correlation between the parameters, in other words, any change in one can be fully compensated by the other. Black indicates that the parameter is completely independent, and is not affected by other parameters.

Note:

The graphic is only available in the PDF version of the Processing Report.
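To make the idea of a correlation matrix concrete, the sketch below derives one from a covariance matrix using the standard definition (each covariance divided by the product of the two standard deviations). It is a generic illustration with made-up values; how the bundle adjustment produces the matrix shown in the report is not described here.

```python
import math


def correlation_matrix(cov):
    """Convert a square covariance matrix (list of lists) to correlations."""
    n = len(cov)
    std = [math.sqrt(cov[i][i]) for i in range(n)]
    return [[cov[i][j] / (std[i] * std[j]) for j in range(n)] for i in range(n)]


# Hypothetical covariance of two internal parameters.
corr = correlation_matrix([[4.0, 2.0], [2.0, 9.0]])
print(corr[0][1])  # about 0.333: the two parameters are only weakly correlated
```

An absolute value close to 1 corresponds to the white (fully correlated) cells described above, and a value close to 0 corresponds to the black (independent) cells.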

2D Keypoints Table

Number of 2D Keypoints per Image | The number of 2D keypoints (characteristic points) per image. |

Number of Matched 2D Keypoints per Image | The number of matched 2D keypoints per image. A matched point is a characteristic point that has initially been detected on at least two images (a 2D keypoint on these images) and has been identified to be the same characteristic point. |

Median | The median number of the above-mentioned keypoints per image. |

Min | The minimum number of the above-mentioned keypoints per image. |

Max | The maximum number of the above-mentioned keypoints per image. |

Mean | The mean or average number of the above-mentioned keypoints per image. |

2D Keypoints Table for Camera

Camera model name | If more than one camera model is used, the number of 2D keypoints found in images associated to a given camera model name is displayed. |

Number of 2D Keypoints per Image | Number of 2D keypoints (characteristic points) per image. |

Number of Matched 2D Keypoints per Image | Number of matched 2D keypoints per image. A matched point is a characteristic point that has initially been detected on at least two images (a 2D keypoint on these images) and has been identified to be the same characteristic point. |

Median | The median number of the above-mentioned keypoints per image. |

Min | The minimum number of the above-mentioned keypoints per image. |

Max | The maximum number of the above-mentioned keypoints per image. |

Mean | The mean or average number of the above-mentioned keypoints per image. |

3D Points from 2D Keypoint Matches

Number of 3D Points Observed in N Images | Each 3D point is generated from keypoints that have been observed in at least two images. Each row of this table displays the number of 3D points that have been observed in n images. The more images in which a 3D point is visible, the higher its accuracy. |
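The table described above is effectively a histogram of how many images observe each 3D point. A minimal sketch of that tally, assuming the observations are available as a mapping from a point identifier to the set of images that see it (the data structure and names are hypothetical):

```python
from collections import Counter


def points_observed_in_n_images(observations):
    """Count 3D points by the number of images in which each is observed.

    observations: dict mapping a 3D point id to the set of image names
    that contain a matched keypoint for that point.
    """
    counts = Counter(
        len(images) for images in observations.values() if len(images) >= 2
    )
    # Sort by n so the result reads like the rows of the report table.
    return dict(sorted(counts.items()))


obs = {1: {"a.jpg", "b.jpg"}, 2: {"a.jpg", "b.jpg", "c.jpg"}, 3: {"b.jpg", "c.jpg"}}
print(points_observed_in_n_images(obs))  # {2: 2, 3: 1}
```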

2D Keypoint Matches

Displays a graphical representation of the top view of the computed image positions with a link between matching images. The darkness of a link indicates the number of matched 2D keypoints between the two images. Bright links indicate weak connections, which may require manual tie points or more images.

Relative camera position and orientation uncertainties

Mean X/Y/Z | Mean uncertainty in the X/Y/Z direction of the relative camera positions. |

Mean Omega/Phi/Kappa | Mean uncertainty in the omega/phi/kappa orientation angle of the relative camera positions. |

Sigma X/Y/Z | Sigma of the uncertainties in the X/Y/Z direction of the relative camera positions. |

Sigma Omega/Phi/Kappa | Sigma of the uncertainties in the omega/phi/kappa angle of the relative camera positions. |

Geolocation Details

Ground Control Points

This section displays if GCPs have been used. GCPs are used to assess and correct the georeference of a project.

If some of your control points are designated as checkpoints, you will see two tables. The first table will have GCP Name in the first column, as shown in the table below. The second table will have Check Point Name in the first column and will contain the same information as the GCP table.

GCP Name | The name of the GCP together with the GCP type. The type can be one of the following:

|

Accuracy X/Y/Z [m] | The accuracy of the GCP/Check Point in each direction (X,Y,Z), as given by the user. |

Error X [m] | The error of the GCP/Check Point in the X direction, that is, the difference between its initial position and its computed position. |

Error Y [m] | The error of the GCP/Check Point in the Y direction, that is, the difference between its initial position and its computed position. |

Error Z [m] | The error of the GCP/Check Point in the Z direction, that is, the difference between its initial position and its computed position. |

Projection error [pixel] | Average distance in the images between where the GCP/Check Point has been marked and where it has been reprojected. |

Verified/Marked | Verified: The number of images on which the GCP/Check Point has been marked and that are taken into account for the reconstruction. Marked: The number of images on which the GCP/Check Point has been marked. |

Mean [m] | The mean or average error in each direction (X,Y,Z). |

Sigma [m] | The standard deviation of the error in each direction (X,Y,Z). |

RMS Error [m] | The Root Mean Square error in each direction (X,Y,Z). |
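The Mean, Sigma, and RMS Error rows summarize the same list of per-point errors in three different ways. The sketch below shows the usual definitions for one direction; it assumes the population form of the standard deviation (dividing by n), which the report does not state, and the sample errors are made up.

```python
import math


def error_stats(errors):
    """Return (mean, sigma, rms) for a list of errors in one direction."""
    n = len(errors)
    if n == 0:
        raise ValueError("at least one error value is required")
    mean = sum(errors) / n
    # Assumption: population standard deviation (divide by n, not n - 1).
    sigma = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    rms = math.sqrt(sum(e * e for e in errors) / n)
    return mean, sigma, rms


mean, sigma, rms = error_stats([0.01, -0.02, 0.04])
print(round(mean, 4), round(sigma, 4), round(rms, 4))
```

With these definitions, the squared RMS error equals the squared mean plus the squared sigma, so a large RMS error with a small sigma points to a systematic offset rather than noise.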

Absolute Geolocation Variance

The number of geolocated and calibrated images that have been labeled as inaccurate. The input coordinates for these images are considered as inaccurate. Their correct optimized positions were found, but they are not taken into account for the following Geolocation Variance tables. | |

Min Error [m]/Max Error [m] | The minimum and maximum error represent the geolocation error intervals between -1.5 and 1.5 times the maximum accuracy (of all X,Y,Z directions) of all the images. |

Geolocation Error X [%] | The percentage of images with geolocation errors in x direction within the predefined error intervals. The geolocation error is the difference between the camera initial geolocations and their computed positions. |

Geolocation Error Y [%] | The percentage of images with geolocation errors in y direction within the predefined error intervals. The geolocation error is the difference between the camera initial geolocations and their computed positions. |

Geolocation Error Z [%] | The percentage of images with geolocation errors in z direction within the predefined error intervals. The geolocation error is the difference between the camera initial geolocations and their computed positions. |

Mean | The mean or average error in each direction (X,Y,Z). |

Sigma | The standard deviation of the error in each direction (X,Y,Z). |

RMS error | The root mean square (RMS) error in each direction (X,Y,Z). |

Relative Geolocation Variance

Relative Geolocation Error | The relative geolocation error for each direction is computed as follows:

Where:

The goal is to verify if the relative geolocation error follows a Gaussian distribution. If it does, the following is true:

|

Images X [%] | The percentage of geolocated and calibrated images with a relative geolocation error in X between -1 and 1, -2 and 2, and -3 and 3. |

Images Y [%] | The percentage of geolocated and calibrated images with a relative geolocation error in Y between -1 and 1, -2 and 2, and -3 and 3. |

Images Z [%] | The percentage of geolocated and calibrated images with a relative geolocation error in Z between -1 and 1, -2 and 2, and -3 and 3. |

Mean of Geolocation Accuracy [m] | The mean or average accuracy set by the user in each direction (X,Y,Z). |

Sigma of Geolocation Accuracy [m] | The standard deviation of the accuracy set by the user in each direction (X,Y,Z). |
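The formula for the relative geolocation error is not reproduced in this section. The sketch below assumes that it is the geolocation error of an image divided by the geolocation accuracy set by the user for that image, which is consistent with the unitless intervals (-1 to 1, -2 to 2, -3 to 3) in the rows above. The function name and the sample values are hypothetical.

```python
def relative_error_shares(errors_m, accuracies_m):
    """Percentage of images whose relative error lies within +/-1, +/-2, +/-3.

    Assumption: relative error = geolocation error / user-set accuracy,
    evaluated per image for a single direction (X, Y, or Z).
    """
    if not errors_m or len(errors_m) != len(accuracies_m):
        raise ValueError("need two non-empty sequences of equal length")
    relative = [e / a for e, a in zip(errors_m, accuracies_m)]
    n = len(relative)
    return {k: 100.0 * sum(abs(r) <= k for r in relative) / n for k in (1, 2, 3)}


# Four images with a 5 m accuracy each.
print(relative_error_shares([1.0, -4.0, 7.5, 12.0], [5.0] * 4))
# {1: 50.0, 2: 75.0, 3: 100.0}
```

For a Gaussian distribution, roughly 68 percent, 95 percent, and 99.7 percent of the values are expected within the three intervals, so percentages far below these suggest that the accuracy values are too optimistic.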

Initial Processing Details

System Information

Hardware | CPU, RAM, and GPU used for processing. |

Operating System | Operating system used for processing. |

Coordinate Systems

Image Coordinate System | Coordinate system of the image geolocation. |

Ground Control Point (GCP) coordinate system | Coordinate system of the GCPs, if GCPs are used. |

Output Coordinate System | Output coordinate system of the project. |

Processing Options

Detected template | Processing Option Template, if a template has been used. |

Keypoints Image Scale | The image scale at which keypoints are computed. The scale can be chosen in three ways:

The following image scales can be selected:

|

Advanced: Matching Image Pairs | Defines how to select which image pairs to match. There are three ways to select them:

|

Advanced: Matching Strategy | Displays whether images are matched using Geometrically Verified Matching. |

Advanced: Keypoint Extraction | The target number of keypoints to extract. The target number can be as follows:

|

Advanced: Calibration | Calibration parameters used:

|

Point Cloud Densification details

Processing Options

Image Scale | The image scale used for the point cloud densification. Also displays whether multiscale is used. The image scale can be one of the following:

|

Point Density | Point density of the densified point cloud. Can be as follows:

|

Minimum Number of Matches | The minimum number of matches per 3D point represents the minimum number of valid reprojections of this 3D point in the images. It can be 2–6. |

3D Textured Mesh Generation | Displays if the 3D textured mesh has been generated or not. |

3D Textured Mesh Settings | Displays the processing settings for the 3D textured mesh generation. Resolution: The resolution selected for the 3D textured mesh generation. It can be as follows:

Color Balancing: It appears when the Color Balancing algorithm is selected for the generation of the texture of the 3D textured mesh. |

LOD | Displays whether levels of detail (LOD) were generated. |

Advanced: 3D Textured Mesh Settings | Sample Density Divider: It can be between 1 and 5. |

Advanced: Matching Window Size | Size of the grid used to match the densified points in the original images. |

Advanced: Image Groups | Image groups for which a densified point cloud has been generated. One densified point cloud is generated per group of images. |

Advanced: Use Processing Area | Displays whether a processing area is taken into account. |

Advanced: Use Annotations | Displays whether annotations are taken into account, as selected in the processing options for the Point Cloud Densification step. |

Advanced: Limit Camera Depth Automatically | Displays whether the camera depth is automatically limited. |

Time for Point Cloud Densification | Time spent to generate the densified point cloud. |

Time for 3D Textured Mesh Generation | Time spent to generate the 3D textured mesh. Displays NA if no 3D textured mesh has been generated. |

Results

Number of Generated Tiles | Displays the number of tiles generated for the densified point cloud. |

Number of 3D Densified Points | Total number of 3D densified points obtained for the project. |

Average Density (per m3) | Average number of 3D densified points obtained for the project per cubic meter. |

DSM, Orthomosaic, and Index Details

Processing Options

DSM and Orthomosaic Resolution | The resolution used to generate the DSM and orthomosaic. If the mean GSD computed during the initial processing step is used, its value is displayed. |

DSM Filters | Displays if the Noise Filtering is used as well as the Surface Smoothing. If the Surface Smoothing is used, its type is displayed as well. It can be as follows:

|

Raster DSM | Displays if the DSM is generated. Displays which method has been used to generate the DSM. It can be as follows:

Displays if the DSM tiles have been merged into one file. |

Orthomosaic | Displays if the orthomosaic is generated. Displays if the orthomosaic tiles have been merged into one file. |

Raster DTM | Displays the resolution at which it has been generated as well as if the DTM tiles have been merged into one file. |

DTM Resolution | Displays the resolution used to generate the DTM. |

Index Calculator: Indices | Displays if indices have been generated. Displays the list of generated indices. |

Contour Lines Generation | Displays if the contour lines are generated. Displays the values of the following parameters that have been used:

|

Index Calculator: Index Values | Displays if the indices have been exported as Point Shapefile Grid Size or as Polygon Shapefile. Displays the grid size for the generated outputs. |

Time for DSM Generation | Time spent to generate the DSM. |

Time for Orthomosaic Generation | Time spent to generate the orthomosaic. |

Time for DTM Generation | Time spent to generate the DTM. |

Time for Contour Lines Generation | Time spent to generate the contour lines. |

Time for Reflectance Map Generation | Time spent to generate the reflectance map. |

Time for Index Map Generation | Time spent to generate the index map. |