You can use the HF Text Summarization model in the Transform Text Using Deep Learning tool available in the GeoAI toolbox in ArcGIS Pro. Follow the steps below to use the model for text summarization.

Summarize Text

Complete the following steps to summarize text:

- Download the HF Text Summarization pretrained model from ArcGIS Living Atlas of the World.

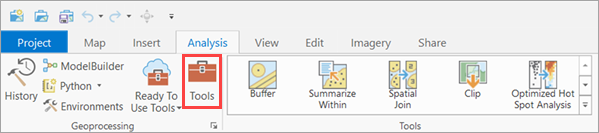

- On the Analysis tab, click Tools.

- Click the Toolboxes tab in the Geoprocessing pane, select GeoAI Tools, and browse to the Transform Text Using Deep Learning tool under Text Analysis.

- Set the variables on the Parameters tab as follows:

- Input Table—The input point, line, or polygon feature class, or table, containing the text.

- Text Field—The text field within the input feature class or table that contains the text to be summarized.

- Input Model Definition File—Select the model .dlpk file.

- Result Field—The name of the field that will contain the summarized text in the output feature class or table. The default field name is Result.

- Arguments—Change the values of the arguments if required.

- huggingface_id—The model ID of a pretrained text summarization model hosted on huggingface.co.

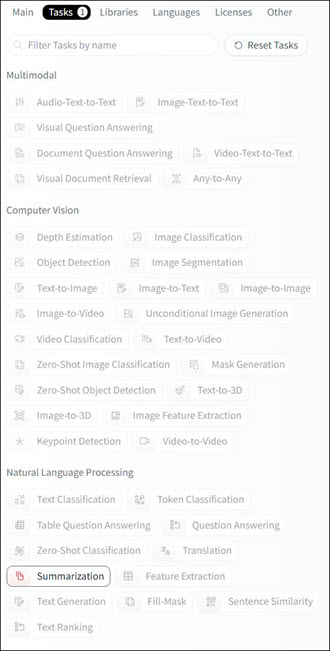

To find text summarization models on the Hugging Face model hub, select the Summarization tag under the Tasks section within the Natural Language Processing category.

The model ID follows the format {username}/{repository} and is displayed at the top of the model's page.

Only models that include a config.json file are supported. You can verify that this file exists on the Files and versions tab of the model page.
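The two requirements above (an ID in the {username}/{repository} form and a config.json file in the repository) can be checked before you run the tool. The following Python sketch is a hypothetical helper, not part of ArcGIS Pro: its regular expression is a simplified approximation of Hugging Face naming rules, `facebook/bart-large-cnn` is used only as an example ID, and the file listings are made up. In practice, the file list could come from the Files and versions tab or from the `huggingface_hub` library's `list_repo_files` function.

```python
import re

# Simplified, assumed pattern for {username}/{repository}: each part starts with a
# letter or digit and may contain letters, digits, ".", "_", or "-".
# This approximates, but is not, the hub's exact naming rules.
MODEL_ID_PATTERN = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*/[A-Za-z0-9][A-Za-z0-9._-]*$")


def is_supported_model(model_id: str, repo_files: list[str]) -> bool:
    """Return True if model_id looks like {username}/{repository} and the
    repository's root file listing includes config.json."""
    if not MODEL_ID_PATTERN.match(model_id):
        return False
    return "config.json" in repo_files


# Hypothetical file listings, as they might appear under "Files and versions".
print(is_supported_model("facebook/bart-large-cnn", ["config.json", "pytorch_model.bin"]))  # True
print(is_supported_model("facebook/bart-large-cnn", ["README.md"]))  # False: no config.json
print(is_supported_model("bart-large-cnn", ["config.json"]))  # False: no username part
```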

- Minimum Sequence Length—The minimum number of characters in the generated summary. Default value: 10.

- Maximum Sequence Length—The maximum number of characters allowed in the generated summary. Default value: 512.

- num_beams—The number of beams used in beam search. Higher values can improve output quality by evaluating multiple possible sequences, but may increase inference time. Default value: 4.

- temperature—Controls the randomness of the generated output. Lower values produce more deterministic summaries, while higher values produce more varied ones. Temperature has an effect only when sampling is enabled (do_sample = True). Default value: 1.

- do_sample—Specifies whether sampling is used during generation. If set to False, the model uses a deterministic decoding method: greedy search or beam search, depending on num_beams.
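To see why lower temperature values give more deterministic output, the following Python sketch applies the standard temperature-scaled softmax to a hypothetical set of scores for three candidate tokens. Dividing the scores by a temperature below 1 concentrates probability on the highest-scoring token, while a temperature above 1 spreads it more evenly. This illustrates the general technique only; it assumes, rather than confirms, that the tool passes temperature to the model's text generation in this standard way.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities after dividing them by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

As the temperature rises, the probability of the top token falls, so sampling picks other tokens more often.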

Note:

The summarization strategy depends on the combination of do_sample and num_beams values:

- Greedy Search (Deterministic): do_sample = False and num_beams = 1.

- Beam Search (Deterministic): do_sample = False and num_beams > 1.

- Sampling (Probabilistic/Creative): do_sample = True and temperature between 0 and 1.
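The three strategies in the note can be compared on a toy example. The following Python sketch uses a hypothetical two-step "model" (the `NEXT` table), chosen so that greedy search and beam search disagree: greedy search commits to the single most likely first token, while beam search with two beams keeps a second candidate whose continuation is much more likely overall. None of these functions are part of ArcGIS Pro or the Hugging Face libraries.

```python
import math
import random

# Hypothetical toy "model": probability of each next token given the last token.
NEXT = {
    "<s>": {"A": 0.5, "B": 0.4, "C": 0.1},
    "A": {"x": 0.4, "y": 0.3, "z": 0.3},
    "B": {"x": 0.9, "y": 0.05, "z": 0.05},
    "C": {"x": 0.34, "y": 0.33, "z": 0.33},
}


def beam_search(num_beams: int, steps: int = 2) -> list[str]:
    """Keep the num_beams highest-scoring partial sequences at each step.
    With num_beams == 1 this is greedy search."""
    beams = [(0.0, ["<s>"])]  # (sum of log-probabilities, tokens so far)
    for _ in range(steps):
        candidates = []
        for score, seq in beams:
            for tok, p in NEXT[seq[-1]].items():
                candidates.append((score + math.log(p), seq + [tok]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:num_beams]
    return beams[0][1][1:]  # best sequence, without the start token


def sample(temperature: float, rng: random.Random, steps: int = 2) -> list[str]:
    """Draw each next token at random from the temperature-scaled distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    seq = ["<s>"]
    for _ in range(steps):
        dist = NEXT[seq[-1]]
        tokens = list(dist)
        # p ** (1 / T) is proportional to softmax(log(p) / T); choices() normalizes.
        weights = [dist[t] ** (1.0 / temperature) for t in tokens]
        seq.append(rng.choices(tokens, weights=weights, k=1)[0])
    return seq[1:]


def summarize_toy(do_sample: bool, num_beams: int,
                  temperature: float = 1.0, seed: int = 0) -> list[str]:
    """Choose a decoding strategy from do_sample and num_beams."""
    if do_sample:
        # This toy ignores num_beams when sampling.
        return sample(temperature, random.Random(seed))
    return beam_search(num_beams)  # num_beams == 1 is greedy search


print(summarize_toy(do_sample=False, num_beams=1))  # ['A', 'x']  (greedy)
print(summarize_toy(do_sample=False, num_beams=2))  # ['B', 'x']  (beam search)
print(summarize_toy(do_sample=True, num_beams=1, temperature=0.7))
```

Greedy search scores A then x at 0.5 × 0.4 = 0.2, while beam search also keeps B and finds B then x at 0.4 × 0.9 = 0.36. The sketch ignores num_beams when sampling; Hugging Face `transformers` can also combine the two (beam-search multinomial sampling).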


- Batch Size—The number of rows to be processed at once. Increasing the batch size can improve tool performance; however, as the batch size increases, more memory is used.

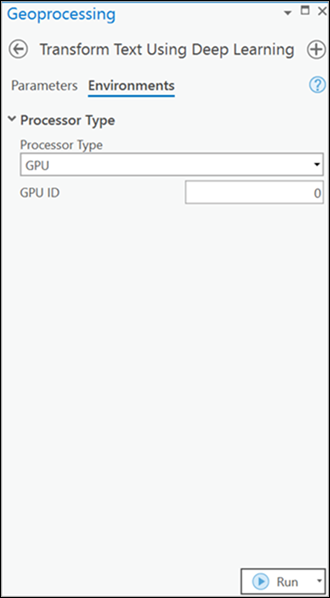
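The Batch Size trade-off can be sketched in Python. The helper below splits the text rows into consecutive batches and calls a summarization function once per batch, collecting one result per input row, much like the values written to the result field. `summarize_rows`, `batched`, and `first_sentence` are hypothetical stand-ins for illustration; the tool's internal batching is not described in this document. Larger batches mean fewer model calls but more texts held in memory at once.

```python
from typing import Callable, Iterator


def batched(rows: list[str], batch_size: int) -> Iterator[list[str]]:
    """Yield consecutive slices of at most batch_size rows."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]


def summarize_rows(rows: list[str], batch_size: int,
                   summarize_batch: Callable[[list[str]], list[str]]) -> list[str]:
    """Call summarize_batch once per batch and collect one result per input row."""
    results: list[str] = []
    for batch in batched(rows, batch_size):
        results.extend(summarize_batch(batch))
    return results


# Hypothetical stand-in for the model: keeps the first sentence of each text.
def first_sentence(batch: list[str]) -> list[str]:
    return [text.split(". ")[0] for text in batch]


texts = ["Road closed. Detour via Main St.", "Flooding reported. Avoid the area.", "Power restored."]
print(summarize_rows(texts, batch_size=2, summarize_batch=first_sentence))
# ['Road closed', 'Flooding reported', 'Power restored.']
```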

- Set the variables on the Environments tab as follows:

- Processor Type—Select CPU or GPU.

It is recommended that you select GPU, if available, and set GPU ID to specify the GPU to be used.


- Click Run.

The result field containing the summarized text is added to the input table.