You can fine-tune the Address Standardization model to suit your geographic area. Fine-tuning a model requires less training data, computational resources, and time compared to training a new model.

Fine-tuning the model is recommended if you do not get satisfactory results from it. This can happen when it is applied in a geography that it wasn't trained on.

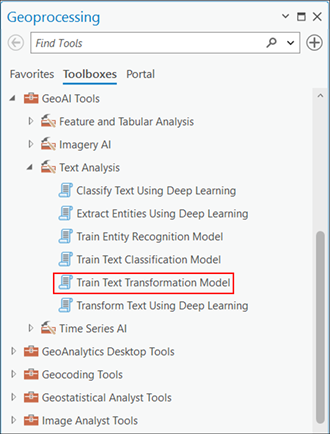

You can fine-tune this model in the Train Text Transformation Model tool available in the GeoAI toolbox in ArcGIS Pro.

Complete the following steps to fine-tune the model:

- Download the Address Standardization model from ArcGIS Living Atlas of the World.

- Browse to Tools on the Analysis tab.

- Click the Toolboxes tab in the Geoprocessing pane, select GeoAI Tools, and browse to the Train Text Transformation Model tool under Text Analysis.

- Set the variables on the Parameters tab as follows:

- Input Table—The input point, line, or polygon feature class or table containing the text to be transformed and target transformed text for training the model.

- Text Field—The text field in the input feature class or table that contains the text to be transformed.

- Label Field—The text field in the input feature class or table that contains the target transformed text for training the model.

- Pretrained Model File—Select the pretrained Address Standardization .dlpk file.

- Output Model—The output folder location that will store the trained model.

- Max Epochs—100 (Adjust this value depending on how long you want to fine-tune the model. An epoch is one complete pass of the tool over the training data.)

- Set the variables on the Model Parameters option as follows:

- Model Backbone (optional)—Specifies the preconfigured neural network that will be used as the architecture for training the model.

- Batch Size—The number of rows to be processed at once. Increasing the batch size can improve tool performance; however, as the batch size increases, more memory is used.

- Sequence Length—Maximum sequence length (at subword level after tokenization) of the training data to be considered for training the model. The default value is 512. This is applicable only for models with HuggingFace transformer backbones.

- Use the Advanced options to make the results more accurate:

- Learning Rate (optional)—The step size indicating how much the model weights will be adjusted during the training process. If no value is specified, an optimal learning rate will be deduced automatically.

- Validation Percentage (optional)—The percentage of training samples that will be used for validating the model. The default value is 10.

- Stop when model stops improving (optional)—Specifies whether model training will stop when the model is no longer improving or continue until the Max Epochs value is reached.

- Remove HTML Tags (optional)—Specifies whether HTML tags will be removed from the input text.

- Remove URLs (optional)—Specifies whether URLs will be removed from the input text.

- Set the variables on the Environments tab by selecting CPU or GPU for Processor Type.

It is recommended that you select GPU, if available, and set GPU ID to the GPU to be used.

- Click Run.

The fine-tuned model is saved to the folder specified for Output Model.
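The same steps can also be scripted. The following is a minimal sketch, not an official workflow. It assumes the tool is exposed in Python as `arcpy.geoai.TrainTextTransformationModel` and that its keyword names match the ones used here, so verify both against the tool reference for your ArcGIS Pro version. The helper functions, paths, and field names are hypothetical. Only the Sequence Length default (512), the Validation Percentage default (10), and the Max Epochs value (100) come from the steps above.

```python
def build_finetune_args(in_table, text_field, label_field, out_model,
                        pretrained_model_file, max_epochs=100,
                        batch_size=None, sequence_length=512,
                        validation_percentage=10):
    """Collect and sanity-check the parameters described in the steps above.

    The keyword names are assumed to match the geoprocessing tool's
    Python signature; check them before running.
    """
    if not str(pretrained_model_file).lower().endswith(".dlpk"):
        raise ValueError("Pretrained Model File should be a .dlpk file")
    if max_epochs < 1:
        raise ValueError("Max Epochs must be at least 1")
    if not 0 < validation_percentage < 100:
        raise ValueError("Validation Percentage must be between 0 and 100")

    args = {
        "in_table": in_table,
        "text_field": text_field,
        "label_field": label_field,
        "out_model": out_model,
        "pretrained_model_file": pretrained_model_file,
        "max_epochs": max_epochs,
        "sequence_length": sequence_length,
        "validation_percentage": validation_percentage,
    }
    # Leave Batch Size out unless it is set, so the tool uses its own default.
    if batch_size is not None:
        args["batch_size"] = batch_size
    return args


def run_finetune(args, use_gpu=True, gpu_id=0):
    """Run the tool with the Processor Type and GPU ID environments set."""
    import arcpy  # available only in an ArcGIS Pro Python environment

    arcpy.env.processorType = "GPU" if use_gpu else "CPU"
    if use_gpu:
        arcpy.env.gpuId = gpu_id
    return arcpy.geoai.TrainTextTransformationModel(**args)


# Hypothetical paths and field names, for illustration only.
example_args = build_finetune_args(
    in_table=r"C:\data\addresses.gdb\training_addresses",
    text_field="raw_address",
    label_field="standardized_address",
    out_model=r"C:\models\address_standardization_finetuned",
    pretrained_model_file=r"C:\models\AddressStandardization.dlpk",
)
```

Calling `run_finetune(example_args)` in an ArcGIS Pro Python environment would start training. Keeping the argument checks separate from the `arcpy` call lets you validate the settings on a machine without ArcGIS Pro installed.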