Note:

ArcGIS Insights is deprecated and will be retiring in 2026. For information on the deprecation, see ArcGIS Insights deprecation.

Regression analysis is a technique that calculates the estimated relationship between a dependent variable and one or more explanatory variables. With regression analysis, you can model the relationship between the chosen variables as well as predict values based on the model.

Regression analysis uses a specified estimation method, a dependent variable, and one or more explanatory variables to create an equation that estimates values for the dependent variable.

The regression model includes outputs, such as R2 and p-values, to provide information on how well the model estimates the dependent variable.

Charts, such as scatter plot matrices, histograms, and point charts, can also be used in regression analysis to analyze relationships and test assumptions.

Regression analysis can be used to solve the following types of problems:

- Determine which explanatory variables are related to the dependent variable.

- Understand the relationship between the dependent and explanatory variables.

- Predict unknown values of the dependent variable.

Examples

The following are example scenarios for using regression analysis:

- An analyst for a small retail chain is studying the performance of different store locations. The analyst wants to know why some stores are having an unexpectedly low sales volume. The analyst creates a regression model with explanatory variables such as median age and income in the surrounding neighborhood, as well as distance to retail centers and public transit, to determine which variables are influencing sales.

- An analyst for a department of education is studying the effects of school breakfast programs. The analyst creates a regression model of educational attainment outcomes, such as graduation rate, using explanatory variables such as class size, household income, school budget per capita, and proportion of students eating breakfast daily. The equation of the model can be used to determine the relative effect of each variable on the educational attainment outcomes.

- An analyst for a nongovernmental organization is studying global greenhouse gas emissions. The analyst creates a regression model for the latest emissions for each country using explanatory variables such as gross domestic product (GDP), population, electricity production using fossil fuels, and vehicle usage. The model can then be used to predict future greenhouse gas emissions using forecasted GDP and population values.

Ordinary Least Squares

Regression analysis in ArcGIS Insights is modeled using the Ordinary Least Squares (OLS) method.

The OLS method is a form of multiple linear regression, meaning the relationship between the dependent variable and the explanatory variables is modeled by fitting a linear equation to the observed data.

An OLS model uses the following equation:

$y_i = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n + \varepsilon$

where:

- $y_i$ = the observed value of the dependent variable at point $i$

- $\beta_0$ = the y-intercept (constant value)

- $\beta_n$ = the regression coefficient or slope for explanatory variable $n$ at point $i$

- $x_n$ = the value of variable $n$ at point $i$

- $\varepsilon$ = the error of the regression equation
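As a minimal sketch of how the coefficients in such an equation can be estimated, the following Python example solves the least-squares normal equations directly. It is an illustration only, not the ArcGIS Insights implementation; the function names `solve` and `fit_ols` and the sample data are hypothetical.

```python
def solve(a, b):
    """Solve the linear system a * x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Move the row with the largest entry in this column into the pivot position.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_ols(rows, y):
    """Return [b0, b1, ..., bn] that minimize the sum of squared residuals."""
    design = [[1.0] + list(r) for r in rows]  # leading 1.0 models the intercept
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical data generated from y = 3 + 2*x1 - 1*x2 with no error term.
rows = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0), (5.0, 6.0)]
y = [3.0, 6.0, 5.0, 8.0, 7.0]

coefficients = fit_ols(rows, y)
print(coefficients)  # approximately [3.0, 2.0, -1.0], up to floating-point rounding
```

Because the sample data contains no error, the fitted coefficients recover the generating equation; with real data, the error term would be nonzero and the coefficients would only approximate the underlying relationship.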

Assumptions

Each regression method has several assumptions that must be met for the equation to be considered reliable. The OLS assumptions should be validated when creating a regression model.

The assumptions described in the subsections below should be tested and met when using the OLS method.

Model must be linear

OLS regression can only be used to create a linear model. Linearity can be tested between the dependent variable and the explanatory variables using a scatter plot. A scatter plot matrix can test all the variables, provided there are no more than five variables in total.

Data must be randomly sampled

The data being used in regression analysis should be sampled in such a way that the samples are not dependent on any external factor. Random sampling can be tested using the residuals from the regression model. The residuals, which are an output from the regression model, should have no correlation when plotted against the explanatory variables on a scatter plot or scatter plot matrix.

Explanatory variables must not be collinear

Collinearity refers to a linear relationship between explanatory variables, which creates redundancy in the model. In some cases, the model can be created with collinearity. However, if one of the collinear variables seems to be dependent on the other, you may want to consider dropping that variable from the model. Collinearity can be tested using a scatter plot or scatter plot matrix of the explanatory variables.
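In addition to a visual check, the strength of a linear relationship between two explanatory variables can be quantified with a Pearson correlation coefficient. The sketch below is an assumption about how such a check could be scripted outside of Insights; the function name `pearson_r` and the sample values are hypothetical.

```python
import math


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / math.sqrt(var_a * var_b)


x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [3.0, 5.0, 7.0, 9.0, 11.0]   # exactly 2*x1 + 1, so perfectly collinear with x1
x3 = [1.0, -1.0, 1.0, -1.0, 1.0]  # no linear relationship with x1

r_collinear = pearson_r(x1, x2)
r_unrelated = pearson_r(x1, x3)
print(r_collinear, r_unrelated)
```

Values near 1 or -1 suggest that the two variables carry redundant information, while values near 0 suggest little linear relationship.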

Explanatory variables must have negligible error in measurement

A regression model is only as accurate as its input data. If the explanatory variables have large margins of error, the model cannot be accepted as accurate. When performing regression analysis, it is important to only use datasets from known and trusted sources to ensure that the error is negligible.

Residuals have an expected sum of zero

Residuals are the difference between observed and estimated values in a regression analysis. Observed values that fall above the regression curve will have a positive residual value, and observed values that fall below the regression curve will have a negative residual value. The regression curve should lie along the center of the data points, and the sum of residuals should be zero. The sum of a field can be calculated in a summary table.
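The zero-sum property can be illustrated with a small script. The following is a sketch under stated assumptions: it fits a single-variable line with an intercept using the closed-form least-squares solution, and the function name `fit_line` and the data are hypothetical.

```python
def fit_line(x, y):
    """Closed-form least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [2.3, 3.9, 6.4, 7.7, 10.2, 11.8]

intercept, slope = fit_line(x, y)
estimated = [intercept + slope * xi for xi in x]

# Residual = observed - estimated; positive above the line, negative below it.
residuals = [yi - ei for yi, ei in zip(y, estimated)]
residual_sum = sum(residuals)

print(residuals)
print(abs(residual_sum) < 1e-9)  # the sum is zero up to floating-point rounding
```

The sum is zero here because the model includes an intercept term; individual residuals still take both positive and negative values.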

Residuals have homogeneous variance

The variance should be the same for all residuals. This assumption can be tested using a scatter plot of the residuals (y-axis) and the estimated values (x-axis). The resulting scatter plot should appear as a horizontal band of randomly plotted points across the plot.

Residuals are normally distributed

A normal distribution, also called a bell curve, is a naturally occurring distribution in which the frequency of a phenomenon is high near the mean and tapers off as the distance from the mean increases. A normal distribution is often used as the null hypothesis in a statistical analysis. The residuals must be normally distributed to show that the line of best fit is optimized centrally within the observed data points, not skewed toward some and away from others. This assumption can be tested by creating a histogram with the residuals. The normal distribution curve can be overlaid and the skewness and kurtosis measures are reported on the back of the histogram card.
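Skewness and kurtosis can also be computed directly from the residuals. The sketch below uses the moment-based definitions, under which a normal distribution has a skewness of 0 and a kurtosis of 3; whether Insights reports kurtosis on this scale or as excess kurtosis is not stated here, so treat the formulas as an assumption. The function names and sample residuals are hypothetical.

```python
def central_moment(values, order):
    """Average of (value - mean) ** order over all values."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** order for v in values) / len(values)


def skewness(values):
    """Third standardized moment; 0 for a symmetric distribution."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5


def kurtosis(values):
    """Fourth standardized moment (not excess kurtosis); 3 for a normal distribution."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2


residuals = [-2.0, -1.0, 0.0, 1.0, 2.0]  # small symmetric sample
skew = skewness(residuals)
kurt = kurtosis(residuals)
print(skew, kurt)
```

A skewness far from 0 indicates that the residuals lean toward one side of the regression line, which is the condition this assumption is meant to rule out.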

Adjacent residuals must not show autocorrelation

This assumption applies to time-ordered data. If the data is time ordered, each data point must be independent of the preceding or subsequent data point. It is important to ensure that the time-ordered data is organized in the correct order when performing a regression analysis. This assumption can be tested using a Durbin-Watson test.

The Durbin-Watson test is a measure of autocorrelation in residuals in a regression model. The Durbin-Watson test uses a scale of 0 to 4, with values 0 to 2 indicating positive autocorrelation, 2 indicating no autocorrelation, and 2 to 4 indicating negative autocorrelation. Values near 2 are required to meet the assumption of no autocorrelation in the residuals. In general, values between 1.5 and 2.5 are considered acceptable, and values less than 1.5 or greater than 2.5 indicate that the model does not fit the assumption of no autocorrelation.
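The Durbin-Watson statistic is the sum of squared differences between consecutive residuals divided by the sum of squared residuals. The following sketch computes it and applies the 1.5 to 2.5 guideline described above; the function names `durbin_watson` and `interpret` and the sample residuals are hypothetical, and the residuals are assumed to already be in time order.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals listed in time order."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


def interpret(d, low=1.5, high=2.5):
    """Apply the general 1.5 to 2.5 guideline for acceptable values."""
    if d < low:
        return "positive autocorrelation"
    if d > high:
        return "negative autocorrelation"
    return "acceptable"


runs = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]             # long runs of the same sign
alternating = [1.0, -1.0, 1.0, -1.0]                  # sign flips at every step
mixed = [1.0, -1.0, -1.0, 1.0, 1.0, -1.0, -1.0, 1.0]  # no consistent pattern

for name, series in [("runs", runs), ("alternating", alternating), ("mixed", mixed)]:
    d = durbin_watson(series)
    print(name, round(d, 3), interpret(d))
```

Residuals that stay on the same side of zero for several consecutive points push the statistic toward 0, while residuals that flip sign at every step push it toward 4.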

Model validity

The accuracy of a regression equation is an important part of regression analysis. All models will include an amount of error, but understanding the statistics will help you determine if the model can be used in the analysis, or if adjustments need to be made.

There are two techniques for determining the validity of a regression model: exploratory analysis and confirmatory analysis, which are described in the subsections below.

Exploratory analysis

Exploratory analysis is a method of understanding data using a variety of visual and statistical techniques. Throughout the course of an exploratory analysis, you will test the assumptions of OLS regression and compare the effectiveness of different explanatory variables. Exploratory analysis allows you to compare the effectiveness and accuracy of different models, but it does not determine whether you should use or reject a model. Exploratory analysis should be performed before confirmatory analysis for each regression model and reiterated to make comparisons between models.

The following charts and statistics can be used as part of an exploratory analysis:

- Scatter plot and scatter plot matrix

- Histogram and normal distribution

- Regression equation and predicting new observations

- Coefficient of determination, R2 and Adjusted R2

- Residual standard error

- Point chart

Exploratory analysis should begin while you are choosing explanatory variables and before you create a regression model. Since OLS is a method of linear regression, one of the main assumptions is that the model must be linear. A scatter plot or scatter plot matrix can be used to assess linearity between the dependent variable and the explanatory variables. A scatter plot matrix can display up to four explanatory variables along with the dependent variable, making it an important tool for large-scale comparisons between all variables. A single scatter plot displays only two variables: the dependent variable and one explanatory variable. Viewing a scatter plot of the dependent variable and a single explanatory variable allows you to make a closer assessment of the relationship between the two variables. Linearity can be tested before you create a regression model to help determine which explanatory variables will create an acceptable model.

Several statistical outputs are available after you create a regression model, including the regression equation, R2 value, and Durbin-Watson test. Once you've created a regression model, you should use the outputs and necessary charts and tables to test the remaining assumptions of OLS regression. If the model meets the assumptions, you can continue with the remaining exploratory analysis.

The regression equation provides valuable information about the influence of each explanatory variable on the predicted values, including the regression coefficient for each explanatory variable. The slope values can be compared to determine the relative influence of each explanatory variable on the dependent variable; the further the slope value is from zero (either positive or negative), the larger the influence. The regression equation can also be used to predict values for the dependent variable by entering values for each explanatory variable.
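As a sketch of how a regression equation is used for prediction, the following example evaluates an equation for one new observation and ranks the explanatory variables by the magnitude of their slopes. The intercept, coefficients, and variable names are hypothetical values chosen for illustration, not output from an actual model.

```python
def predict(intercept, coefficients, values):
    """Evaluate intercept + b1*x1 + ... + bn*xn for one observation."""
    if len(coefficients) != len(values):
        raise ValueError("one value is required for each coefficient")
    return intercept + sum(b * x for b, x in zip(coefficients, values))


# Hypothetical model with two explanatory variables.
names = ["variable_a", "variable_b"]
intercept = 10.0
coefficients = [2.0, -0.5]

# Predict the dependent variable for a new observation.
prediction = predict(intercept, coefficients, [3.0, 4.0])
print(prediction)  # 14.0

# Rank explanatory variables by distance of the slope from zero.
ranked = sorted(zip(names, coefficients), key=lambda pair: abs(pair[1]), reverse=True)
print(ranked)
```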

The coefficient of determination, symbolized as R2, measures how well the regression equation models the actual data points. The R2 value is a number between 0 and 1, with values closer to 1 indicating more accurate models. An R2 value of 1 indicates a perfect model, which is highly unlikely in real-world situations given the complexity of interactions between different factors and unknown variables. You should strive to create a regression model with the highest R2 value possible, while recognizing that the value may not be close to 1.

When performing regression analysis, there is a risk of creating a regression model that has an acceptable R2 value by adding explanatory variables that cause a better fit based on chance alone. The adjusted R2 value accounts for the number of explanatory variables, reducing the role that chance plays in the calculation. The adjusted R2 value is never greater than the R2 value and usually falls between 0 and 1, although it can be negative for a model that fits poorly. An adjusted R2 value should be used for models using many explanatory variables, or when comparing models with different numbers of explanatory variables.
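The two measures can be sketched in a few lines using the standard formulas: R2 is 1 minus the ratio of the residual sum of squares to the total sum of squares, and adjusted R2 scales the unexplained share by (n - 1) / (n - k - 1), where n is the number of observations and k is the number of explanatory variables. The function names and sample values below are hypothetical.

```python
def r_squared(observed, estimated):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - e) ** 2 for o, e in zip(observed, estimated))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, k):
    """Penalize R2 for the number of explanatory variables k, given n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


observed = [1.0, 2.0, 3.0, 4.0, 5.0]
estimated = [1.1, 1.9, 3.2, 3.8, 5.0]  # hypothetical output of a one-variable model

r2 = r_squared(observed, estimated)
adj_r2 = adjusted_r_squared(r2, n=len(observed), k=1)
print(round(r2, 4), round(adj_r2, 4))
```

Adding an explanatory variable never lowers R2, but it lowers adjusted R2 unless the improvement in fit outweighs the penalty for the extra variable.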

The residual standard error measures the accuracy with which the regression model can predict values with new data. It is expressed in the units of the dependent variable, and smaller values indicate a more accurate model. When multiple models are compared, the model with the smallest residual standard error is the most accurate by this measure.
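A common definition of the residual standard error is the square root of the residual sum of squares divided by the residual degrees of freedom, n - k - 1, where n is the number of observations and k is the number of explanatory variables. The sketch below assumes that definition; the function name and the residual values are hypothetical.

```python
import math


def residual_standard_error(residuals, k):
    """sqrt(SS_residual / (n - k - 1)) for a model with k explanatory variables."""
    n = len(residuals)
    ss_res = sum(e ** 2 for e in residuals)
    return math.sqrt(ss_res / (n - k - 1))


# Hypothetical residuals from two one-variable models fitted to the same data.
residuals_model_1 = [-0.1, 0.1, -0.2, 0.2, 0.0]
residuals_model_2 = [-0.4, 0.3, -0.5, 0.5, 0.1]

rse_1 = residual_standard_error(residuals_model_1, k=1)
rse_2 = residual_standard_error(residuals_model_2, k=1)
print(round(rse_1, 4), round(rse_2, 4))
```

In this comparison the first model has the smaller value, so it would be considered the more accurate of the two by this measure.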

Point charts can be used to analyze explanatory variables for patterns such as clustering and outliers, which may affect the accuracy of the model.

Confirmatory analysis

Confirmatory analysis is the process of testing a model against a null hypothesis. In regression analysis, the null hypothesis is that there is no relationship between the dependent variable and the explanatory variables. A model with no relationship would have slope values of 0. If the elements of the confirmatory analysis are statistically significant, you can reject the null hypothesis (in other words, statistical significance indicates that a relationship does exist between the dependent and explanatory variables).

The following statistical outputs are used to determine significance as part of confirmatory analysis:

- F statistic and its associated p-value

- t statistics and their associated p-values

- Confidence intervals

The F statistic is a global statistic returned from an F-test, which indicates the predictive capability of the regression model by determining whether the regression coefficients in the model, taken together, are significantly different from 0. The F-test analyzes the combined influence of the explanatory variables, rather than testing the explanatory variables individually. The F statistic has an associated p-value, which is the probability of obtaining an F statistic at least as large as the one observed if the null hypothesis were true (in other words, if the relationships in the data were happening by chance alone). Since p-values are probabilities, the values are given on a scale from 0.0 to 1.0. A small p-value, usually 0.05 or less, is required to reject the null hypothesis and conclude that the relationships in the model are statistically significant. At a p-value of 0.05, relationships this strong would arise by chance alone only about 1 time in 20 if no real relationship existed.
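For an OLS model with an intercept, the F statistic can be written as the explained variation per explanatory variable divided by the unexplained variation per residual degree of freedom. The following sketch computes the statistic only; converting it to a p-value requires the F distribution, which is not part of the Python standard library, so that step is omitted. The function name and data are hypothetical.

```python
def f_statistic(observed, estimated, k):
    """F = (SS_regression / k) / (SS_residual / (n - k - 1)).

    Assumes the estimates come from an OLS model with an intercept,
    so that SS_regression = SS_total - SS_residual.
    """
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    ss_res = sum((o - e) ** 2 for o, e in zip(observed, estimated))
    ss_reg = ss_tot - ss_res
    return (ss_reg / k) / (ss_res / (n - k - 1))


observed = [1.0, 2.0, 3.0, 4.0, 5.0]
estimated = [1.1, 1.9, 3.2, 3.8, 5.0]  # hypothetical output of a one-variable model

f_value = f_statistic(observed, estimated, k=1)
print(round(f_value, 2))
```

A large F statistic means the model explains far more variation than it leaves unexplained, which corresponds to a small p-value.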

The t statistic is a local statistic returned from a t-test, which indicates the predictive capability of each explanatory variable individually. As with the F-test, the t-test analyzes if the regression coefficients in the model are significantly different from zero. However, since a t-test is performed on each explanatory variable, the model will return a t statistic value for each explanatory variable, rather than one per model. Each t statistic has an associated p-value, which indicates the significance of the explanatory variable. As with the p-values for the F-test, the p-value for each t-test should be 0.05 or less to reject the null hypothesis. If an explanatory variable has a p-value greater than 0.05, the variable should be discarded and a new model should be created, even if the global p-value was significant.

Confidence intervals show the regression coefficient for each explanatory variable and the associated 90, 95, and 99 percent confidence intervals. The confidence intervals can be used alongside the p-values from the t-tests to assess the null hypothesis for individual explanatory variables. The regression coefficients must not be equal to 0 if you are to reject the null hypothesis and continue using the model. For each explanatory variable, the regression coefficient and the associated confidence intervals should not overlap with 0. Because the intervals widen as the confidence level increases, the 99 percent interval can overlap with 0 when the narrower intervals do not. If the 90 or 95 percent confidence interval for a given explanatory variable overlaps with 0, the null hypothesis cannot be rejected for that explanatory variable. Including such a variable in the model may have an effect on the overall significance of the model. If only the 99 percent confidence interval overlaps with 0, the explanatory variable may be included in the model provided the other global statistics are significant. Ideally, the confidence intervals for all explanatory variables should be far from 0.

Other outputs

Other outputs, such as estimated values and residuals, are important for testing the assumptions of OLS regression. How these values are calculated is described in the subsections below.

Estimated values

The estimated values are calculated using the regression equation and the values for each explanatory variable. Ideally, the estimated values would be equal to the observed values (in other words, the actual values of the dependent variable).

Estimated values are used with the observed values to calculate residuals.

Residuals

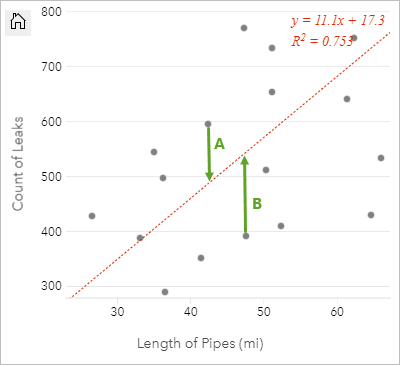

The residual values in a regression analysis are the differences between the observed values in the dataset and the estimated values calculated with the regression equation.

The residuals A and B for the relationship above would be calculated as follows:

residualsA = observedA - estimatedA

residualsA = 595 - 487.62

residualsA = 107.38

residualsB = observedB - estimatedB

residualsB = 392 - 527.27

residualsB = -135.27

Residuals can be used to calculate error in a regression equation as well as to test several assumptions.