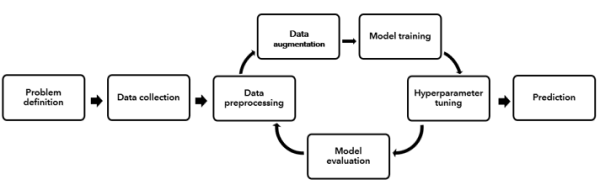

A typical deep learning (DL) project workflow begins with identifying the business problem and formulating the problem statement or question. This is followed by a series of steps, including data preparation (or preprocessing), model training, hyperparameter tuning, and model evaluation. The process is iterative, and the optimal model is often reached only after multiple iterations and experiments.

Identifying the model that best fits the data takes considerable time, effort, and expertise across the DL process. The Train Using AutoDL tool automates this workflow and identifies the neural networks and hyperparameter settings that best fit the data. The sections below describe each step of the DL process in more detail.

Train using AutoDL workflow

The Train Using AutoDL tool automates the following:

- Data augmentation—Successful DL projects require large amounts of high-quality input data that address a specific problem. In practice, however, it is difficult to obtain large amounts of classified or labeled data. Data augmentation techniques increase both the amount and the diversity of the data so that it better resembles real-world data. Augmentation can involve geometric transformations, flipping, cropping, translation, noise injection, and so on. Done manually, this step is time-consuming and tedious and may require detailed, domain-specific knowledge and experience from the DL practitioner.

- Automatic batch size deduction—The output of the Export Training Data For Deep Learning tool is a folder containing a deep learning training dataset. This exported dataset contains a large number of images, which must be fed to the model in batches sized to the available compute resources. The tool automates the calculation of the optimal batch size for training the deep learning model on the available resources.

- Model training and model selection—In the model training step, the DL practitioner chooses an appropriate DL network based on the problem and the characteristics of the data. They then begin the iterative process of training models to fit the data, which often includes experimenting with several different DL neural networks. Each network may have many hyperparameters: values specified manually by the DL practitioner that control how the model learns. These hyperparameters are then tuned (in other words, adjusted) to improve the model's performance. This iterative process requires the time and expertise of the DL practitioner. Candidate networks include object detection models such as SingleShotDetector, RetinaNet, YoloV3, FasterRCNN, and MMDetection, and pixel classification models such as UnetClassifier, PSPNetClassifier, DeepLab, and MMSegmentation. Each of these models performs better on some datasets than on others, and it is difficult to predict which will work well on a given dataset, so the models must be trained and their performance compared before choosing the one that best fits the data.

- Hyperparameter tuning—Although most of the previous steps are iterative, hyperparameter tuning is often the most difficult step in training deep learning models.

Hyperparameters can be thought of as levers that come with each model. Tuning the models trained here involves deducing a suitable learning rate and choosing a suitable backbone.

- Model evaluation—The final step in the DL workflow is model evaluation, in which you validate that the trained and tuned DL neural networks generalize well to data they were not fitted on. This unseen data, often referred to as the validation or test set, is kept separate from the data used to train the model. The goal of this final step is to ensure that the DL networks produce acceptable predictive accuracy on new data.
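The data augmentation step described above can be illustrated with a minimal sketch. It assumes an image is a nested list of grayscale pixel values and uses hypothetical helper names (`hflip`, `random_crop`, `add_noise`, `augment`); it is not the tool's augmentation pipeline, which likely operates on image tensors and applies many more transforms.

```python
import random

Image = list[list[float]]  # assumption: a grayscale image as rows of pixel values

def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def random_crop(img: Image, size: int, rng: random.Random) -> Image:
    """Cut a size x size window at a random position inside the image."""
    top = rng.randint(0, len(img) - size)
    left = rng.randint(0, len(img[0]) - size)
    return [row[left:left + size] for row in img[top:top + size]]

def add_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Inject Gaussian noise into every pixel."""
    return [[p + rng.gauss(0.0, sigma) for p in row] for row in img]

def augment(img: Image, n: int, crop: int, seed: int = 0) -> list[Image]:
    """Create n randomly transformed variants of one training image."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        x = random_crop(img, crop, rng)
        if rng.random() < 0.5:  # flip roughly half of the samples
            x = hflip(x)
        out.append(add_noise(x, 0.01, rng))
    return out

img = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
variants = augment(img, n=3, crop=3)
print(len(variants), len(variants[0]), len(variants[0][0]))  # 3 3 3
```

Each call multiplies one labeled image into several plausible variants, which is how augmentation enlarges and diversifies a small training set.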
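One plausible way to deduce a batch size automatically is sketched below. This is an assumption for illustration, not the tool's documented algorithm: it picks the largest power of two whose estimated memory footprint fits into the free GPU memory after reserving a safety margin and the model's own footprint. The `max_batch_size` helper and its per-image and model memory estimates are hypothetical.

```python
def max_batch_size(free_mem_mb: float, per_image_mb: float,
                   model_mb: float, headroom: float = 0.9,
                   cap: int = 256) -> int:
    """Return the largest power-of-two batch that fits in memory (heuristic)."""
    budget = free_mem_mb * headroom - model_mb  # memory left for image batches
    if budget < per_image_mb:
        raise ValueError("not enough memory for even one image")
    batch = 1
    # Double the batch while the next size still fits and stays under the cap.
    while batch * 2 <= cap and batch * 2 * per_image_mb <= budget:
        batch *= 2
    return batch

print(max_batch_size(free_mem_mb=8000, per_image_mb=150, model_mb=1200))  # 32
```

Powers of two are a common convention for batch sizes; a real implementation might instead probe memory by running trial batches.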
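Taken together, model selection and hyperparameter tuning amount to a search: train each candidate network with each hyperparameter setting, score it on validation data, and keep the best. The sketch below is an exhaustive grid search that assumes a hypothetical `train_and_score` callable in place of real training; the backbone names and the toy scoring function are illustrative, and AutoDL's own strategy may differ (for example, it deduces the learning rate rather than searching a grid of values).

```python
from itertools import product
from typing import Callable, Optional

def search(networks: list[str], backbones: list[str],
           learning_rates: list[float],
           train_and_score: Callable[[str, str, float], float]):
    """Try every (network, backbone, learning rate) combination; return the best."""
    best_cfg: Optional[tuple] = None
    best_score = float("-inf")
    for net, bb, lr in product(networks, backbones, learning_rates):
        score = train_and_score(net, bb, lr)  # validation metric, higher is better
        if score > best_score:
            best_cfg, best_score = (net, bb, lr), score
    return best_cfg, best_score

# Toy stand-in for training: a made-up scoring function, not real results.
def fake_train(net: str, bb: str, lr: float) -> float:
    base = {"SingleShotDetector": 0.60, "RetinaNet": 0.70, "FasterRCNN": 0.68}[net]
    bonus = {"resnet34": 0.00, "resnet50": 0.03}[bb]
    return base + bonus - abs(lr - 1e-3) * 10  # penalize distance from 1e-3

cfg, score = search(["SingleShotDetector", "RetinaNet", "FasterRCNN"],
                    ["resnet34", "resnet50"], [1e-4, 1e-3, 1e-2], fake_train)
print(cfg)  # ('RetinaNet', 'resnet50', 0.001)
```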
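Model evaluation depends on data the model never saw during training. The minimal sketch below holds out a validation set and measures pixel accuracy on a prediction; `holdout_split` and `pixel_accuracy` are hypothetical helpers, and the 10 percent validation fraction is an assumption, not the tool's default.

```python
import random

def holdout_split(items: list, val_fraction: float = 0.1, seed: int = 42):
    """Shuffle a copy of the items and split off a validation set."""
    shuffled = items[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]  # (train, validation)

def pixel_accuracy(pred: list[list[int]], truth: list[list[int]]) -> float:
    """Fraction of pixels whose predicted class matches the label."""
    pairs = [(p, t) for pr, tr in zip(pred, truth) for p, t in zip(pr, tr)]
    return sum(p == t for p, t in pairs) / len(pairs)

train, val = holdout_split(list(range(100)))
print(len(train), len(val))  # 90 10
print(pixel_accuracy([[0, 1], [1, 1]], [[0, 1], [0, 1]]))  # 0.75
```

Because the split is made before training, the validation items never influence the fitted weights, so the score measured on them estimates performance on new data.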

Every step of a DL workflow involves some degree of human input, decision making, and choice, for example:

- Was the appropriate data collected to address the problem, and is there enough of it?

- What constitutes the background class in the context of the data?

- If mislabeled data is found, what should replace it?

- How many epochs should the DL model be trained for?

- Which DL neural network should be used?

- What is an acceptable level of performance for the model?

- What is the best combination of hyperparameters for a given model?

This last decision can involve hundreds or even thousands of hyperparameter combinations to iterate over. When you train and tune many DL networks, the process quickly becomes unmanageable and unproductive. Additionally, several steps in the DL workflow require expert understanding of data science techniques, statistics, and deep learning algorithms. As a result, designing and running DL projects can be time-consuming, labor-intensive, and costly, and is often highly dependent on trained DL practitioners and data scientists.
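A short calculation makes the scale concrete by counting the training runs in an exhaustive grid search. The candidate values and the 30-minutes-per-run figure are illustrative assumptions, not the tool's actual search space or timings.

```python
from itertools import product
from math import prod

# Illustrative search space (assumed values)
search_space = {
    "network": ["SingleShotDetector", "RetinaNet", "YoloV3", "FasterRCNN", "MMDetection"],
    "backbone": ["resnet18", "resnet34", "resnet50", "resnet101"],
    "learning_rate": [1e-5, 1e-4, 1e-3, 1e-2],
    "epochs": [10, 20, 50],
}

# The number of runs is the product of the number of choices per dimension.
n_runs = prod(len(v) for v in search_space.values())
print(n_runs)  # 240

# Enumerating the grid confirms the count.
assert n_runs == sum(1 for _ in product(*search_space.values()))

hours = n_runs * 0.5  # assumption: 30 minutes of training per run
print(hours)  # 120.0
```

Adding one more hyperparameter with only three values would triple both numbers, which is why manual exhaustive tuning quickly becomes impractical.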

In the past decade, deep learning has grown rapidly, both in the range of its applications and in the volume of new research. Some of the largest driving forces behind this growth are the maturity of DL algorithms and methods, the generation and proliferation of massive volumes of data for the algorithms to learn from, the abundance of inexpensive compute to run them, and growing awareness among businesses that DL algorithms can address complex data structures and problems.

Many organizations want to use DL to take advantage of their data and derive actionable new insights from it, but there is an imbalance between the number of potential DL applications and the number of trained, expert DL practitioners available to address them. As a result, there is increasing demand to democratize DL by creating tools that make it widely accessible throughout an organization and that non-DL experts and domain experts can use off the shelf.

Recently, Automated Deep Learning (AutoDL) has emerged as a way to meet the massive demand for DL within organizations across all experience and skill levels. AutoDL aims to create a single system that automates (in other words, removes human input from) as much of the DL workflow as possible, including data preparation, data augmentation, model selection, hyperparameter tuning, and model evaluation. In doing so, it can benefit nonexperts by lowering their barrier to entry into DL, and it can benefit trained DL practitioners by eliminating some of the most tedious and time-consuming steps in the DL workflow.

AutoDL for the non-DL expert (GIS analysts, business analysts, or data analysts who are domain experts)—The main advantage of using AutoDL is that it eliminates some of the steps in the DL workflow that require the most technical expertise and understanding. Analysts who are domain experts can define their business problem and collect the appropriate data, and then let the computer learn to do the rest. They don’t need a deep understanding of data science techniques for data cleaning and augmentation, they don’t need to know what all the DL neural networks do, and they don’t need to spend time exploring different networks and hyperparameter configurations. Instead, these analysts can focus on applying their domain expertise to a specific business problem or domain application, rather than on the DL workflow itself. Additionally, they can be less dependent on trained data scientists and DL engineers in their organization because they can build and use advanced models on their own, often without any coding experience required.

AutoDL for the DL expert (data scientists or DL engineers)—AutoDL can also be highly beneficial to DL experts, although the reasons may be less obvious. For one, DL experts do not have to spend as much time supporting the domain experts in their organization and can therefore focus on their own, more advanced DL work. On their own projects, AutoDL can be a significant time saver and productivity booster, because many of the time-consuming steps in the DL workflow, such as data augmentation, model selection, and hyperparameter tuning, can be automated. The time saved on these repetitive, exploratory steps can be shifted to more advanced technical tasks or to tasks that require more human input (for example, collaborating with domain experts, understanding the business problem, or interpreting the DL results).

In addition to saving time, AutoDL can boost the productivity of DL practitioners because it eliminates some of the subjective choice and experimentation involved in the DL workflow. For example, a DL practitioner approaching a new project may have the training and expertise to judge which new features to construct, which DL network is best for a particular problem, and which hyperparameters are optimal. However, they may overlook certain new features or fail to try every possible combination of hyperparameters while performing the DL workflow. Additionally, the DL practitioner may bias the feature selection process or the choice of algorithm because they prefer a particular DL network based on their previous work or its success in other DL applications they have seen. In reality, no single DL algorithm performs best on all datasets, some DL algorithms are more sensitive than others to the choice of hyperparameters, and business problems vary in complexity and in how interpretable the DL algorithms used to solve them must be. AutoDL can help reduce some of this human bias by applying many different DL networks to the same dataset and then determining which one performs best.

For the DL practitioner, AutoDL can also serve as an initial starting point or benchmark in a DL project. They can use it to automatically develop a baseline model for a dataset, which gives them a set of preliminary insights into the problem. From there, they may decide to add or remove specific features from the input dataset, or focus on a specific DL network and fine-tune its hyperparameters. In this sense, AutoDL narrows down the set of initial choices for a trained DL practitioner so that they can focus on improving the performance of the DL system overall. This is a common workflow in practice: DL experts develop a data-driven benchmark using AutoDL and then build on it, incorporating their expertise to refine the results.

In the end, democratizing DL via AutoDL in an organization allows domain experts to focus their attention on the business problem and obtain actionable results, allows more analysts to build better models, and can reduce the number of DL experts that the organization needs to hire. It can also help boost the productivity of trained DL practitioners and data scientists, allowing them to focus their expertise on the multitude of other tasks where it is needed most.

Interpret the output reports

The Train Using AutoDL tool generates trained deep learning packages (.dlpk) and displays a leaderboard in the tool output window.

The leaderboard lists the evaluated models and their metric values. For object detection problems, the model with the highest average precision score is considered the best; for pixel classification problems, the model with the highest accuracy is considered the best.
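The selection rule above can be expressed as a short function. The leaderboard rows, the field names (`model`, `average_precision`, `accuracy`), and the problem-type keys are hypothetical; they mirror the rule described above but are not the tool's actual output format.

```python
# Metric used to rank models for each problem type, following the rule above.
RANKING_METRIC = {
    "object_detection": "average_precision",
    "pixel_classification": "accuracy",
}

def best_model(leaderboard: list[dict], problem_type: str) -> dict:
    """Return the leaderboard row with the highest value of the ranking metric."""
    metric = RANKING_METRIC[problem_type]
    return max(leaderboard, key=lambda row: row[metric])

# Made-up rows for illustration only
detections = [
    {"model": "RetinaNet", "average_precision": 0.71},
    {"model": "FasterRCNN", "average_precision": 0.74},
    {"model": "YoloV3", "average_precision": 0.66},
]
print(best_model(detections, "object_detection")["model"])  # FasterRCNN
```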