A decision tree is a type of supervised machine learning algorithm, used by the Train Using AutoML tool, that classifies or regresses data based on the true or false answers to a series of questions. When visualized, the resulting structure takes the form of a tree with three types of nodes: root, internal, and leaf. The root node is the starting point of the decision tree, which then branches to internal nodes and leaf nodes. The leaf nodes hold the final classification categories or real values. Decision trees are easy to understand, and their predictions can be explained by tracing the path from the root to a leaf.
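The node structure and the true or false questions can be illustrated with a small hand-built tree. This is a minimal sketch in plain Python, not how the Train Using AutoML tool stores its models: the `Node` class, the `predict` helper, the feature name `petal_width`, the thresholds, and the class labels are all hypothetical. Root and internal nodes ask "is this feature less than a threshold?", and leaf nodes hold the result.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Node:
    """Root and internal nodes ask 'is feature < threshold?'; leaf nodes hold the result."""
    feature: Optional[str] = None          # feature tested by a root or internal node
    threshold: Optional[float] = None      # the question is: feature < threshold?
    left: Optional["Node"] = None          # branch followed when the answer is True
    right: Optional["Node"] = None         # branch followed when the answer is False
    value: Union[str, float, None] = None  # class label or real value stored in a leaf

    def is_leaf(self) -> bool:
        return self.value is not None


def predict(node: Node, sample: dict) -> Union[str, float]:
    """Start at the root and follow True/False answers until a leaf is reached."""
    while not node.is_leaf():
        node = node.left if sample[node.feature] < node.threshold else node.right
    return node.value


# Hypothetical tree: the feature name, thresholds, and labels are illustrative only.
root = Node(
    feature="petal_width", threshold=0.8,           # root node
    left=Node(value="class A"),                     # leaf
    right=Node(                                     # internal node
        feature="petal_width", threshold=1.75,
        left=Node(value="class B"),                 # leaf
        right=Node(value="class C"),                # leaf
    ),
)

print(predict(root, {"petal_width": 0.2}))  # class A
print(predict(root, {"petal_width": 1.5}))  # class B
print(predict(root, {"petal_width": 2.1}))  # class C
```

Each prediction answers at most two questions before reaching a leaf, which is what makes the path from root to leaf easy to read back as an explanation.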

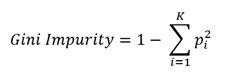

To construct a decision tree, start by selecting a feature that will become the root node. Typically, no single feature can perfectly predict the final classes; this is called impurity. Measures such as Gini impurity and entropy (on which information gain is based) are used to quantify this impurity and identify how well a feature classifies the given data. At each level, the feature with the lowest impurity is selected as the node. To calculate the Gini impurity for a feature with numerical values, first sort the data in ascending order of that feature and calculate the average of each pair of adjacent values. Then, for each of these averages, split the data points into two groups according to whether their feature values are less than or greater than that average, and check how well that split separates the classes. The Gini impurity of each group is calculated using the equation below, where K is the number of classification categories and p is the proportion of instances belonging to each category.

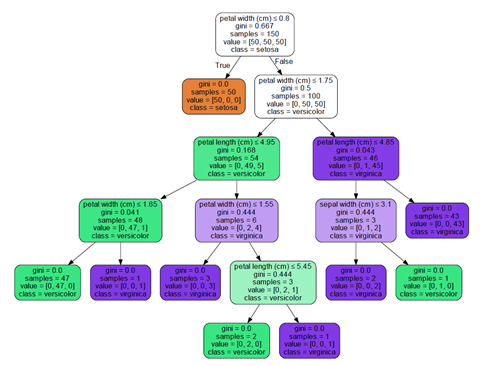
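The candidate-threshold and Gini steps can be sketched in plain Python, assuming the standard form of the Gini impurity, G = 1 - Σ p_k² for k = 1..K, where p_k is the proportion of instances in category k. The function names `gini` and `candidate_thresholds` and the toy data are hypothetical, chosen only for illustration. Duplicate feature values are removed before averaging so that no candidate threshold coincides with an existing value; that is a choice of this sketch.

```python
from collections import Counter


def gini(labels):
    """Gini impurity G = 1 - sum_k p_k**2, where p_k is the share of labels in class k."""
    n = len(labels)
    if n == 0:
        return 0.0  # convention used here: an empty group contributes no impurity
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def candidate_thresholds(values):
    """Sort the distinct feature values ascending and average each adjacent pair."""
    v = sorted(set(values))
    return [(a + b) / 2 for a, b in zip(v, v[1:])]


# Hypothetical toy data: one numeric feature and a class label per data point.
xs = [1.0, 2.0, 3.0, 4.0]
ys = ["no", "no", "yes", "yes"]

# Worked example: 3 instances of one class, 1 of another -> 1 - (0.75**2 + 0.25**2)
print(gini(["a", "a", "a", "b"]))  # 0.375

for t in candidate_thresholds(xs):
    below = [y for x, y in zip(xs, ys) if x < t]
    above = [y for x, y in zip(xs, ys) if x > t]
    print(f"threshold {t}: Gini below = {gini(below):.3f}, Gini above = {gini(above):.3f}")
```

On this toy data the candidate thresholds are 1.5, 2.5, and 3.5, and only 2.5 produces two pure groups (Gini 0 on both sides); the other two leave one group with a Gini impurity of about 0.444.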

At each value, the Gini impurities of the two resulting leaves are combined into a weighted average, with each leaf weighted by its share of the data points. The value with the lowest weighted impurity is selected for that feature. The process is repeated for the other features, and the feature and value with the lowest impurity become the node. This process is repeated at every node, at each depth level, until all the data is classified. Once the tree is constructed, a prediction for a data point is made by going down the tree, following the condition at each node, until a leaf is reached; that leaf gives the final value or classification. When decision trees are used for regression, the sum of squared residuals or the variance is used to measure impurity instead of Gini impurity. The rest of the method follows similar steps.
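The whole procedure (weighted impurity per candidate value, best feature and value per node, recursion, and prediction) can be sketched end to end. This is a minimal, illustrative sketch in plain Python, not the implementation used by the Train Using AutoML tool. Assumptions: nodes are stored as dictionaries, splits test `feature < threshold` at midpoints, and a hypothetical `max_depth` limit is added as a stopping rule alongside purity. For regression it uses variance as the impurity; the weighted average of the two sides' variances equals their combined sum of squared residuals divided by the number of data points, so both measures select the same split. Leaves return the majority class (classification) or the mean (regression), which is also a choice of this sketch.

```python
from collections import Counter


def gini(labels):
    """Gini impurity, 1 - sum of squared class proportions (classification)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def variance(values):
    """Variance around the mean (impurity for regression)."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def mean(values):
    return sum(values) / len(values)


def best_split(X, y, impurity):
    """Try every feature and every midpoint threshold; keep the lowest weighted impurity."""
    n, best = len(y), None
    for j in range(len(X[0])):
        vals = sorted(set(row[j] for row in X))
        for t in [(a + b) / 2 for a, b in zip(vals, vals[1:])]:
            left = [yi for row, yi in zip(X, y) if row[j] < t]
            right = [yi for row, yi in zip(X, y) if row[j] >= t]
            # Weight each side's impurity by its share of the data points.
            score = len(left) / n * impurity(left) + len(right) / n * impurity(right)
            if best is None or score < best[2]:
                best = (j, t, score)
    return best  # None if no feature has two distinct values


def build(X, y, impurity, leaf_value, depth=0, max_depth=5):
    """Split recursively until a node is pure, cannot be split, or max_depth (assumed) is hit."""
    split = best_split(X, y, impurity) if impurity(y) > 0 and depth < max_depth else None
    if split is None:
        return {"value": leaf_value(y)}
    j, t, _ = split
    lx = [row for row in X if row[j] < t]
    ly = [yi for row, yi in zip(X, y) if row[j] < t]
    rx = [row for row in X if row[j] >= t]
    ry = [yi for row, yi in zip(X, y) if row[j] >= t]
    return {
        "feature": j,
        "threshold": t,
        "left": build(lx, ly, impurity, leaf_value, depth + 1, max_depth),
        "right": build(rx, ry, impurity, leaf_value, depth + 1, max_depth),
    }


def predict(node, row):
    """Walk from the root, answering 'row[feature] < threshold?' until a leaf is reached."""
    while "value" not in node:
        node = node["left"] if row[node["feature"]] < node["threshold"] else node["right"]
    return node["value"]


# Hypothetical toy data for illustration only.
clf = build([[1.0, 5.0], [2.0, 4.0], [3.0, 1.0], [4.0, 2.0]], ["a", "a", "b", "b"], gini, majority)
print(predict(clf, [1.5, 3.0]))  # a
print(predict(clf, [3.5, 0.0]))  # b

reg = build([[1.0], [2.0], [3.0], [4.0]], [1.0, 1.2, 3.0, 3.2], variance, mean, max_depth=1)
print(predict(reg, [1.8]), predict(reg, [3.9]))  # approximately 1.1 and 3.1
```

Only the impurity function and the leaf rule change between classification and regression, which mirrors the point above that the rest of the method follows similar steps. When two splits tie, the strict `<` comparison keeps the first one found.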

The following example shows a decision tree that classifies flowers based on the width and height of their petals and sepals:

Additional Resources

Quinlan, J. R. C4.5: Programs for Machine Learning. Morgan Kaufmann Publishers, 1993.