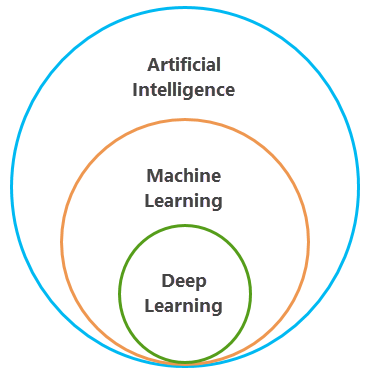

Machine learning tools have been a core component of spatial analysis in GIS for decades. You have been able to use machine learning in ArcGIS to perform image classification, enrich data with clustering, or model spatial relationships. Machine learning is a branch of artificial intelligence in which structured data is processed with an algorithm to solve a problem. Traditional structured data requires a person to label the data, such as pictures of cats and dogs, so that the algorithm can learn the features specific to each animal type and use them to identify these animals in other pictures.

Deep learning is a subset of machine learning that uses several layers of algorithms in the form of neural networks. Input data is analyzed through different layers of the network, with each layer defining specific features and patterns in the data. For example, if you want to identify features such as buildings and roads, the deep learning model can be trained with images of different buildings and roads, processing the images through layers within the neural network, and then finding the identifiers required to classify a building or road.
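To make the idea of layers concrete, the following is a minimal sketch of a forward pass through a two-layer network. The weights, the two-value input, and the function names are hypothetical and hand-picked for illustration; a real model learns its weights from training data and has far more layers and parameters.

```python
# Hypothetical, hand-picked weights (assumption: not taken from any trained model).
HIDDEN_W = [[1.0, -1.0], [0.5, 0.5]]
HIDDEN_B = [0.0, -0.5]
OUT_W = [[1.0, 2.0]]
OUT_B = [0.1]


def relu(values):
    """Clip negative activations to zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of all inputs plus a bias per output."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(inputs):
    """Pass the input through a hidden layer, then an output layer."""
    hidden = relu(dense(inputs, HIDDEN_W, HIDDEN_B))
    return dense(hidden, OUT_W, OUT_B)
```

Each call to `dense` followed by `relu` plays the role of one layer: it turns the values it receives into a new set of features for the next layer.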

Esri has developed tools and workflows that use the latest innovations in deep learning to answer some of the challenging questions in GIS and remote sensing applications. Computer vision, or the ability of computers to gain understanding from digital images or videos, is an area that has been shifting from traditional machine learning algorithms to deep learning methods. Before applying deep learning to imagery in AllSource, it is important to understand the different applications of deep learning for computer vision.

Applications of deep learning for computer vision

There are many computer vision tasks that can be accomplished with deep learning neural networks. Esri has developed tools that allow you to perform tasks such as image classification, object detection, semantic segmentation, and instance segmentation. The computer vision tasks described below are each illustrated with an example from remote sensing, general computer vision, or both.

Image classification

Image classification involves assigning a label or class to a digital image. For example, the drone image on the left below might be labeled crowd, and the digital photo on the right might be labeled cat. This type of classification is also known as object classification or image recognition, and it can be used in GIS to categorize features in an image.
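As a rough sketch of the final step of image classification, assume a model has already produced one raw score per class for the whole image. The scores and class names below are hypothetical. A softmax converts the scores to probabilities, and the image receives the label with the highest probability.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, class_names):
    """Return the most probable class name and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical scores for a single image.
label, confidence = classify([2.0, 0.5, -1.0], ["crowd", "cat", "other"])
```

The whole image gets a single label, which is what distinguishes this task from the pixel-level and object-level tasks.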

Object detection

Object detection is the process of locating features in an image. For example, in the remote sensing image below, the neural network found the location of an airplane. In a more general computer vision use case, a model may be able to detect the location of different animals. This process typically involves drawing a bounding box around the features of interest. It can be used in GIS to locate specific features in satellite, aerial, or drone imagery and to plot those features on a map.
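Plotting a detection on a map requires converting the bounding box from pixel coordinates to map coordinates. The sketch below assumes a north-up image with square cells and a known top-left origin. The function name, parameters, and sample values are hypothetical and are not part of any Esri API.

```python
def pixel_box_to_map(box, origin_x, origin_y, cell_size):
    """Convert a pixel bounding box to map coordinates.

    box is (col_min, row_min, col_max, row_max), with rows counted
    downward from the top-left corner of the image.
    Returns (xmin, ymin, xmax, ymax) in map units.
    """
    col_min, row_min, col_max, row_max = box
    xmin = origin_x + col_min * cell_size
    xmax = origin_x + col_max * cell_size
    # Map y decreases as the pixel row increases in a north-up image.
    ymax = origin_y - row_min * cell_size
    ymin = origin_y - row_max * cell_size
    return (xmin, ymin, xmax, ymax)


# Hypothetical detection in an image with 0.5-unit cells.
extent = pixel_box_to_map((10, 20, 30, 40), 500000.0, 4100000.0, 0.5)
```

Rotated or skewed imagery would need a full affine transformation instead of this simplified origin and cell size calculation.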

Semantic segmentation

Semantic segmentation classifies each pixel in an image as belonging to a class. For example, in the image on the left below, road pixels are classified separately from nonroad pixels. On the right, pixels that make up a cat in a photo are classified as cat, while the other pixels in the image belong to other classes. In GIS, this is often referred to as pixel classification, image segmentation, or image classification. It is often used to create land-use classification maps.
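A minimal sketch of the per-pixel decision, assuming a model has already produced one score grid per class (the tiny grids below are hypothetical): each pixel is assigned the index of the class with the highest score at that location.

```python
def segment(score_maps):
    """Assign each pixel the index of its highest-scoring class.

    score_maps[c][r][col] is the score of class c at row r, column col.
    """
    n_classes = len(score_maps)
    rows = len(score_maps[0])
    cols = len(score_maps[0][0])
    return [
        [max(range(n_classes), key=lambda c: score_maps[c][r][col])
         for col in range(cols)]
        for r in range(rows)
    ]


# Hypothetical 2 x 2 score grids: class 0 = road, class 1 = nonroad.
road_scores = [[0.9, 0.2], [0.8, 0.1]]
nonroad_scores = [[0.1, 0.8], [0.2, 0.9]]
class_grid = segment([road_scores, nonroad_scores])
```

The output has the same dimensions as the input image, with a class value in every cell, which is why the result can be used directly as a classified raster.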

Instance segmentation

Instance segmentation is a more precise object detection method in which the boundary of each object instance is drawn. For example, in the image on the left below, the roofs of houses are detected, including the precise outline of the roof shape. On the right, cars are detected, and you can see the distinct shape of the cars. This type of deep learning application is also known as object segmentation.
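To illustrate what a per-instance result looks like, the sketch below separates a binary roof mask into individually numbered objects using connected-component labeling. This is a simplification for illustration only: instance segmentation models predict a mask for each object directly, and touching objects would be merged by this approach. The function name and sample mask are hypothetical.

```python
from collections import deque


def label_instances(mask):
    """Give each 4-connected group of nonzero pixels its own ID (1, 2, ...).

    Returns the label grid and the number of instances found.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and labels[r][c] == 0:
                next_id += 1
                labels[r][c] = next_id
                queue = deque([(r, c)])
                # Breadth-first flood fill over the neighboring object pixels.
                while queue:
                    cr, cc = queue.popleft()
                    for nr, nc in ((cr - 1, cc), (cr + 1, cc),
                                   (cr, cc - 1), (cr, cc + 1)):
                        if (0 <= nr < rows and 0 <= nc < cols
                                and mask[nr][nc] and labels[nr][nc] == 0):
                            labels[nr][nc] = next_id
                            queue.append((nr, nc))
    return labels, next_id


# Hypothetical roof mask containing two separate roofs.
roof_mask = [[1, 1, 0, 0],
             [0, 0, 0, 1],
             [0, 0, 1, 1]]
instance_grid, count = label_instances(roof_mask)
```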

Panoptic segmentation

Panoptic segmentation combines semantic segmentation and instance segmentation. For example, in the image below, every pixel is classified, and each object, such as each car, is identified as a separate instance.
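One way to picture a panoptic result is as a grid in which every pixel carries both a class and an instance ID. The sketch below assumes the two grids already exist. The encoding, with instance ID 0 for background classes such as road, is a hypothetical convention chosen for illustration.

```python
def combine_panoptic(class_grid, instance_grid):
    """Pair each pixel's class with its instance ID."""
    return [
        [(cls, inst) for cls, inst in zip(class_row, instance_row)]
        for class_row, instance_row in zip(class_grid, instance_grid)
    ]


# Hypothetical 2 x 3 scene: two cars on a road.
classes = [["road", "car", "car"],
           ["car", "road", "road"]]
instances = [[0, 1, 1],
             [2, 0, 0]]
panoptic = combine_panoptic(classes, instances)
```

In this sample, the two car pixels in the first row belong to car 1, while the car pixel in the second row belongs to car 2, even though all three share the same class.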

Image translation

Image translation is the task of translating an image from one possible representation or style of a scene to another. Noise reduction and super-resolution are two examples. In the image on the left below, you can see the original low-resolution image, and the image on the right shows the result of using a super-resolution model. This type of deep learning application is also known as image-to-image translation.

Change detection

Deep learning change detection identifies changes in features of interest between two dates and generates a logical map of change. For example, the image on the left below shows a housing development from five years ago, the middle image shows the same development today, and the image on the right shows the logical change map, in which new homes appear in white.
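The sketch below illustrates what a logical change map contains, assuming two building masks for the same area have already been derived for each date. This is a simplification: a deep learning change detection model works from the image pair itself, not from precomputed masks. The function name and sample grids are hypothetical.

```python
def new_feature_map(before, after):
    """Return 1 where a feature is present in 'after' but not in 'before'."""
    return [
        [1 if (a and not b) else 0 for b, a in zip(before_row, after_row)]
        for before_row, after_row in zip(before, after)
    ]


# Hypothetical building masks: 1 = home, 0 = no home.
five_years_ago = [[1, 0, 0],
                  [0, 0, 0]]
today = [[1, 1, 0],
         [0, 1, 1]]
change = new_feature_map(five_years_ago, today)
```

Cells with a value of 1 correspond to the white areas in a change map: homes that exist today but did not exist at the earlier date.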