The Remove duplicates tool removes duplicate records based on one or more key fields. The output is a new dataset with no duplicate records.

Examples

The Remove duplicates tool can be used in scenarios such as the following:

- You have location data containing records that have the same values for time, latitude, and longitude. Specify the fields containing those values to remove the duplicate locations.

- Your transaction data incorrectly stores the same transaction multiple times. Remove the duplicate records based on the transaction ID field to gain a more accurate understanding of your sales.
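The two scenarios above can be illustrated with a small conceptual sketch in plain Python. It is not the Data Pipelines implementation: the `remove_duplicates` function and the field names `transaction_id` and `amount` are hypothetical, and the sketch keeps the first record seen for each key, which the Remove duplicates tool does not guarantee.

```python
from typing import Any, Dict, Hashable, Iterable, List, Sequence, Tuple

Record = Dict[str, Any]


def remove_duplicates(records: Iterable[Record], key_fields: Sequence[str]) -> List[Record]:
    """Return one record per unique combination of key field values.

    Hypothetical helper for illustration only. It keeps the first record
    seen for each key; the actual tool does not guarantee this.
    """
    seen: set = set()
    result: List[Record] = []
    for record in records:
        key: Tuple[Hashable, ...] = tuple(record[field] for field in key_fields)
        if key not in seen:
            seen.add(key)
            result.append(record)
    return result


# Scenario 1: location records duplicated on time, latitude, and longitude.
locations = [
    {"time": "2024-01-01T10:00", "latitude": 34.05, "longitude": -118.24},
    {"time": "2024-01-01T10:00", "latitude": 34.05, "longitude": -118.24},
    {"time": "2024-01-01T10:05", "latitude": 34.06, "longitude": -118.25},
]
unique_locations = remove_duplicates(locations, ["time", "latitude", "longitude"])

# Scenario 2: the same transaction stored more than once (hypothetical fields).
transactions = [
    {"transaction_id": "T1", "amount": 20.0},
    {"transaction_id": "T2", "amount": 15.5},
    {"transaction_id": "T1", "amount": 20.0},
]
unique_transactions = remove_duplicates(transactions, ["transaction_id"])
total_sales = sum(t["amount"] for t in unique_transactions)

print(len(unique_locations))  # 2
print(total_sales)  # 35.5
```

Summing the amounts before removing duplicates would give 55.5, which overstates sales by the repeated transaction.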

Parameters

The following table outlines the parameters used in the Remove duplicates tool:

| Parameter | Description |
|---|---|
| Input dataset | The dataset containing the duplicate records. |
| Key fields | A list of one or more fields that identify unique records. |

Usage notes

Use the Input dataset parameter to identify the dataset containing duplicate records.

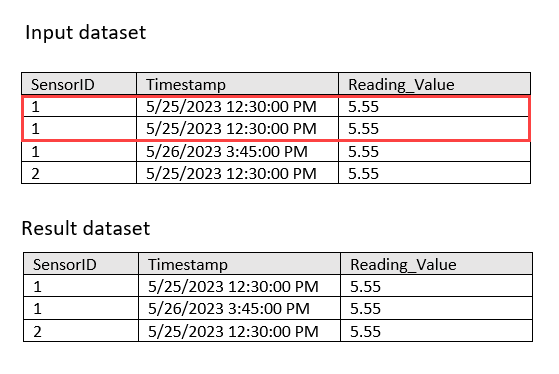

Use the Key fields parameter to specify one or more fields that together identify a unique record. If the dataset contains a single unique identifier field, you can use it to remove duplicates. Alternatively, you can specify a combination of fields to identify unique records. For example, if you have sensor reading data, you can specify the fields containing the timestamp, ID, and reading value to remove duplicates of the same reading.
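The choice of key fields determines which records count as duplicates. The following conceptual sketch, which is not the tool's implementation, compares a key made of the sensor ID alone with a key that combines timestamp, ID, and reading value. The `dedupe_on` function and the field names `sensor_id`, `timestamp`, and `value` are hypothetical.

```python
from typing import Any, Dict, List, Sequence

Record = Dict[str, Any]


def dedupe_on(records: List[Record], key_fields: Sequence[str]) -> List[Record]:
    # Hypothetical helper: build a dict keyed on the key field values. A later
    # record with the same key overwrites an earlier one, so each key appears once.
    by_key = {tuple(r[f] for f in key_fields): r for r in records}
    return list(by_key.values())


readings = [
    {"sensor_id": "S1", "timestamp": "2024-05-01T12:00", "value": 21.4},
    {"sensor_id": "S1", "timestamp": "2024-05-01T12:00", "value": 21.4},  # repeated reading
    {"sensor_id": "S1", "timestamp": "2024-05-01T12:15", "value": 21.9},
    {"sensor_id": "S2", "timestamp": "2024-05-01T12:00", "value": 19.8},
]

# The sensor ID alone is not unique per reading, so distinct readings collapse.
by_id = dedupe_on(readings, ["sensor_id"])

# Combining timestamp, ID, and value removes only the repeated reading.
by_reading = dedupe_on(readings, ["timestamp", "sensor_id", "value"])

print(len(by_id))       # 2
print(len(by_reading))  # 3
```

A key that is too coarse silently discards valid records, so the key fields should together describe what makes a record distinct.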

The tool does not guarantee which record in a set of duplicates is retained, and the retained record is not necessarily the first occurrence in the input dataset. For example, if the first three records of the input dataset are duplicates, the first and third records may be removed and the second record retained.
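One implication is that fields that are not key fields may carry values from any one of the duplicates. The following sketch, with hypothetical helpers `keep_first` and `keep_last` and hypothetical fields `transaction_id` and `loaded_at`, contrasts two retention strategies. Both produce output with no duplicate keys, yet they retain different non-key values. It only illustrates the consequence; it does not describe how the tool chooses a record.

```python
from typing import Any, Dict, List, Sequence

Record = Dict[str, Any]


def keep_first(records: List[Record], key_fields: Sequence[str]) -> List[Record]:
    result: Dict[tuple, Record] = {}
    for r in records:
        # setdefault stores the record only if its key is not present yet.
        result.setdefault(tuple(r[f] for f in key_fields), r)
    return list(result.values())


def keep_last(records: List[Record], key_fields: Sequence[str]) -> List[Record]:
    result: Dict[tuple, Record] = {}
    for r in records:
        # Plain assignment overwrites, so the last record for each key wins.
        result[tuple(r[f] for f in key_fields)] = r
    return list(result.values())


# Three records share the key field value but differ in a non-key field.
records = [
    {"transaction_id": "T1", "loaded_at": "09:00"},
    {"transaction_id": "T1", "loaded_at": "09:05"},
    {"transaction_id": "T1", "loaded_at": "09:10"},
]

first = keep_first(records, ["transaction_id"])
last = keep_last(records, ["transaction_id"])

# Both outputs are free of duplicates on the key field...
assert len(first) == len(last) == 1
# ...but the retained non-key values differ.
print(first[0]["loaded_at"], last[0]["loaded_at"])  # 09:00 09:10
```

If downstream steps depend on a non-key field, it is safer not to assume which duplicate's value survives.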

Outputs

The tool outputs a dataset with no duplicate records, based on the specified key fields.
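If you export the input and output datasets, a check like the following sketch can confirm the expected result, assuming (as the examples above suggest) that one record is kept for each distinct combination of key field values. The `has_no_duplicates` and `covers_all_keys` functions and the `id` field are hypothetical and are not part of Data Pipelines.

```python
from collections import Counter
from typing import Any, Dict, List, Sequence

Record = Dict[str, Any]


def has_no_duplicates(records: List[Record], key_fields: Sequence[str]) -> bool:
    """True if no two records share the same key field values."""
    counts = Counter(tuple(r[f] for f in key_fields) for r in records)
    return all(n == 1 for n in counts.values())


def covers_all_keys(inputs: List[Record], outputs: List[Record], key_fields: Sequence[str]) -> bool:
    """True if the input and output contain the same set of key combinations."""
    def keys(rs: List[Record]) -> set:
        return {tuple(r[f] for f in key_fields) for r in rs}
    return keys(inputs) == keys(outputs)


input_records = [{"id": 1}, {"id": 1}, {"id": 2}]
output_records = [{"id": 1}, {"id": 2}]

print(has_no_duplicates(output_records, ["id"]))               # True
print(covers_all_keys(input_records, output_records, ["id"]))  # True
```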

Licensing requirements

The following licensing and configurations are required:

- Creator or GIS Professional user type

- Publisher, Facilitator, or Administrator role, or an equivalent custom role

To learn more about Data Pipelines requirements, see Requirements.